Avoiding bumps on the road: how thermal imaging can improve the safety of autonomous vehicles

Autonomous driving has recently witnessed several setbacks, with at least three major accidents in 2018 to date. But infrared imaging, also known as thermal imaging, holds great promise of decreasing the likelihood of certain kinds of accidents occurring again.

Autonomous driving is no longer a novel concept found only in science fiction. With the advent of cost-effective sensors and improved computing capabilities, it has become a fast-developing industry that is growing rapidly. It is estimated that by the 2030s, fully autonomous vehicles will be a common sight on the road. This disruptive technology will have lasting impacts on today’s economy and lifestyle.

Dangers on the Road to Autonomy

For fully autonomous driving to reach the level of adoption at which it becomes truly disruptive, a few technical hurdles must first be cleared. Full detection of a vehicle’s surroundings must be attainable, which requires sensor fusion: the automatic combining of multiple imaging modalities. The sensors typically used for this in current autonomous vehicle designs are radar, LIDAR, ultrasonic sensors, and charge-coupled device (CCD) cameras. With these sensors, full environmental detection should be possible. But as so often happens, theory and practice diverge: current detection system architectures have already had several major failures in 2018. On January 22nd and March 23rd, Tesla vehicles operating on Autopilot struck a stationary fire truck and a concrete lane divider respectively, with the latter resulting in the death of the driver. On March 18th, an Uber self-driving Volvo, equipped with radar, LIDAR, and CCD cameras, struck a pedestrian crossing a road in Arizona. The deadly collision was caused by limited nighttime visibility and by the radar and LIDAR systems failing to properly detect the crossing pedestrian. The detection rate of this system would have been improved, and the pedestrian’s life likely saved, had it been equipped with an infrared thermal imaging camera.

Why Infrared/Thermal Imaging?

Infrared (IR) is one of the seven types of electromagnetic radiation. All objects with temperatures above absolute zero emit infrared light, and the intensity of this light depends on the object’s temperature: the higher the temperature, the more IR the object emits. Thermal imaging takes advantage of this by capturing the “invisible” IR radiation and converting it into an image that the human eye can perceive. This image can then be used to detect and classify objects in much the same way as images from CCD cameras.
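The link between temperature and emitted IR can be made concrete with two standard results from blackbody physics, the Stefan-Boltzmann law and Wien's displacement law. The sketch below is illustrative only: the temperatures and the emissivity value are assumptions chosen for the example, not figures from this article, and real objects only approximate ideal grey bodies.

```python
# Physical constants (CODATA values).
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)
WIEN_B = 2.897771955e-3            # m K


def radiant_exitance(temp_kelvin, emissivity=1.0):
    """Total power radiated per unit area (W/m^2) by a grey body."""
    if temp_kelvin < 0:
        raise ValueError("temperature must be >= 0 K")
    return emissivity * STEFAN_BOLTZMANN * temp_kelvin ** 4


def peak_wavelength_um(temp_kelvin):
    """Wavelength of peak emission in micrometres (Wien's displacement law)."""
    return WIEN_B / temp_kelvin * 1e6


# Assumed example temperatures: exposed skin (~32 C) and a cold road (~5 C).
# The emissivity of 0.98 is likewise an assumption for illustration.
for name, temp in (("skin", 305.0), ("road", 278.0)):
    power = radiant_exitance(temp, emissivity=0.98)
    peak = peak_wavelength_um(temp)
    print(f"{name}: {power:.0f} W/m^2, peak near {peak:.1f} um")
```

Because emitted power scales with the fourth power of temperature, even a modest temperature difference between a pedestrian and the background produces a noticeable difference in emitted IR, which is the source of the contrast described in the list that follows.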

Infrared can be leveraged in several ways to improve existing autonomous sensory systems:

- Warm-bodied objects exhibit high contrast in cold environments, making them easily identifiable

- There is no dependence on visible light, which gives better performance at night

- Infrared provides more detail on objects than LIDAR and radar

- There are no blooming effects from lights in the camera’s field of view (FOV)

- There are minimal effects on the image from shadows

A direct comparison of a CCD camera image and an IR image taken from a vehicle is shown below in Fig. 1. Thermal imaging falls short in several respects: its high cost, which could be lowered with economies of scale; low levels of image detail; low information content when no warm bodies are present in the image; and the dependence of image appearance, and therefore of the associated algorithms, on weather and time-of-day conditions.

The lower image shows all of the relatively warm areas in front of the vehicle. By comparing the two images, four pedestrians can be quickly located by inspecting all warm spots. Any warm profile with an ambiguous outline can be confirmed as a pedestrian by comparing it directly to the CCD image, and any shape that appears to be a pedestrian in the CCD image can be confirmed by matching it with a warm spot in the IR image. In this scenario, the CCD and IR images could be described as redundant, as both are used to locate a pedestrian. This is the concept of sensor fusion in practice: the redundancy is beneficial because the information content of the two images is different, so together they can detect and classify targets with a higher probability.
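One simple way to express this cross-confirmation in code is to pair overlapping detections from the two cameras and combine their confidence scores. The sketch below is a hypothetical illustration, not the method used by any particular vehicle: it assumes the CCD and IR images are already aligned so that box coordinates are comparable, that each sensor reports a (box, score) pair, and that the two sensors make errors independently (the "noisy-OR" rule). The function names and the overlap threshold are invented for the example.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def fuse(ccd_dets, ir_dets, min_iou=0.5):
    """Pair overlapping CCD and IR detections and combine their scores.

    Matched pairs are merged with a noisy-OR rule, 1 - (1 - p_ccd)(1 - p_ir),
    which assumes independent sensor errors. Unmatched detections are kept
    with their single-sensor score.
    """
    fused, used_ir = [], set()
    for box_c, p_c in ccd_dets:
        best_j, best_iou = None, min_iou
        for j, (box_i, _) in enumerate(ir_dets):
            if j in used_ir:
                continue
            overlap = iou(box_c, box_i)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is None:
            fused.append((box_c, p_c))
        else:
            used_ir.add(best_j)
            p_i = ir_dets[best_j][1]
            fused.append((box_c, 1 - (1 - p_c) * (1 - p_i)))
    # IR-only detections, e.g. a pedestrian the CCD camera missed at night.
    fused.extend(d for j, d in enumerate(ir_dets) if j not in used_ir)
    return fused


# Made-up detections: one pedestrian seen by both cameras, one seen only in IR.
ccd = [((10, 10, 50, 90), 0.6)]
ir = [((12, 8, 52, 88), 0.7), ((200, 20, 230, 80), 0.8)]
fused = fuse(ccd, ir)
```

In this made-up case the pedestrian seen by both cameras ends up with a higher combined score than either sensor gave alone, and the IR-only detection is retained rather than discarded.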

Getting from Sensing to Detecting

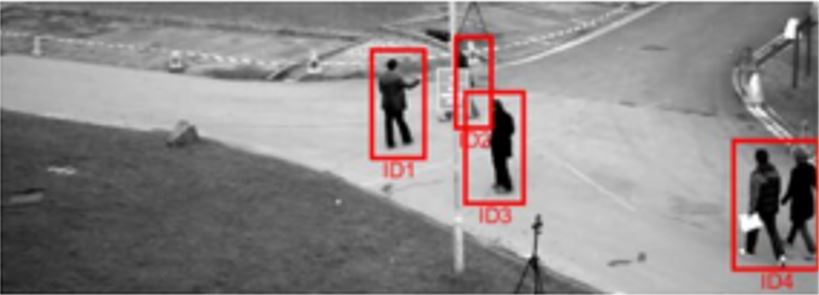

Detecting objects in an image is a separate problem from classification. Detection is done by determining whether there is an object of interest within the frame and enclosing that object within a shape (often a rectangular box). An example is shown in Fig. 2. This detection was done on a CCD image using a frame-differencing method called background subtraction. The detections shown are imperfect, as the pair of pedestrians in the bottom right has been detected as a single object.
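A minimal sketch of background subtraction follows, assuming greyscale frames stored as lists of pixel rows and a fixed, already known background image. It is not the implementation behind Fig. 2; the threshold, the function names, and the tiny synthetic frame are assumptions for illustration. Pixels that differ from the background by more than the threshold are marked as foreground, and each connected group of foreground pixels is enclosed in a box.

```python
def foreground_mask(frame, background, threshold=30):
    """Mark pixels whose intensity differs from the background by > threshold."""
    return [[abs(f - b) > threshold for f, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def bounding_boxes(mask):
    """Return inclusive (x1, y1, x2, y2) boxes, one per 4-connected blob."""
    h = len(mask)
    w = len(mask[0]) if mask else 0
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Flood-fill this blob, tracking its extent as we go.
            stack = [(x, y)]
            seen[y][x] = True
            x1 = x2 = x
            y1 = y2 = y
            while stack:
                cx, cy = stack.pop()
                x1, x2 = min(x1, cx), max(x2, cx)
                y1, y2 = min(y1, cy), max(y2, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            boxes.append((x1, y1, x2, y2))
    return boxes


# Synthetic 10x6 scene: uniform background, one isolated object, and two
# objects standing side by side (columns 5-6 and 7-8) that touch each other.
background = [[50] * 10 for _ in range(6)]
frame = [row[:] for row in background]
for y in range(1, 4):
    for x in range(1, 3):
        frame[y][x] = 200
for y in range(2, 5):
    for x in range(5, 9):
        frame[y][x] = 180

boxes = bounding_boxes(foreground_mask(frame, background))
print(boxes)
```

The two touching objects come out as one box, which is the same kind of merged detection seen for the pair of pedestrians in Fig. 2: frame differencing alone has no notion of where one object ends and the next begins.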

The object classification discussed for Fig. 1 is trivial for a human inspecting the images, but not for a computer tasked with identifying the objects. People learn the characteristics of their surroundings from birth and can classify objects quickly based on years of experience and exposure. In machine vision, this classification cannot be done unless the algorithm has been trained to recognize distinct images (or image features). A pedestrian, for example, can appear in an image in countless poses and orientations, and could be wearing different clothes, holding bags, partially occluded, walking behind another pedestrian, and so on. Extending this to all living things makes the problem very large. Classification algorithms can be trained to address it.

Once an object has been detected, it needs to be labelled with a classification. One way to automate this is to use a neural network (NN). In the simplest sense, NNs perform pattern recognition on an input and return the likelihood of that input belonging to each class in the training dataset. To train an NN, one must first take a labelled dataset (in this case, labelled detections) and feed it through the NN, which, in short, causes the NN to associate certain images with certain labels. The resulting classifications can then be compared with results from other sensors to improve detection rates.

This detect-then-classify approach is a simplified picture of how it can be done. Many algorithms now compute detection and classification simultaneously using a type of neural network called a convolutional neural network (CNN). These networks take advantage of mid-level features rather than the low-level features that a detect-then-classify algorithm may use.
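The training idea described above, feeding labelled detections through a network until it associates inputs with labels, can be sketched with the smallest possible network: a single softmax layer trained by gradient descent. This is a toy under stated assumptions, not a CNN and not a production classifier. The two features per detection (a normalised mean thermal intensity and a normalised height-to-width ratio), the sample values, and the class labels are all invented for the example.

```python
import math


def softmax(scores):
    """Convert raw class scores into likelihoods that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, biases, features):
    """Return the likelihood of each class for one feature vector."""
    scores = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(weights, biases)]
    return softmax(scores)


def train(samples, n_classes, epochs=500, lr=0.5):
    """Fit a single softmax layer with stochastic gradient descent."""
    n_features = len(samples[0][0])
    weights = [[0.0] * n_features for _ in range(n_classes)]
    biases = [0.0] * n_classes
    for _ in range(epochs):
        for features, label in samples:
            probs = predict(weights, biases, features)
            for k in range(n_classes):
                # Gradient of cross-entropy loss with respect to score k.
                grad = probs[k] - (1.0 if k == label else 0.0)
                for i, x in enumerate(features):
                    weights[k][i] -= lr * grad * x
                biases[k] -= lr * grad
    return weights, biases


# Hypothetical labelled detections: (thermal intensity, height/width), label.
PEDESTRIAN, OTHER = 0, 1
samples = [
    ((0.90, 0.80), PEDESTRIAN), ((0.80, 0.90), PEDESTRIAN), ((0.85, 0.70), PEDESTRIAN),
    ((0.20, 0.30), OTHER), ((0.30, 0.10), OTHER), ((0.10, 0.20), OTHER),
]

weights, biases = train(samples, n_classes=2)
warm_tall = predict(weights, biases, (0.88, 0.75))
cool_flat = predict(weights, biases, (0.15, 0.25))
print("warm, tall detection:", warm_tall)
print("cool, flat detection:", cool_flat)
```

The output of `predict` is a list of likelihoods, one per class, which is the quantity that could then be compared against results from other sensors.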

Infrared imaging offers additional information to existing sensory systems in autonomous driving. The additional thermal information can be used to detect and classify a target, or to confirm whether a target already classified by another sensor is likely to match its assigned classification. By implementing IR imaging in autonomous driving, environmental information content can increase and accidents like the fatal pedestrian collision in Arizona can be avoided.
