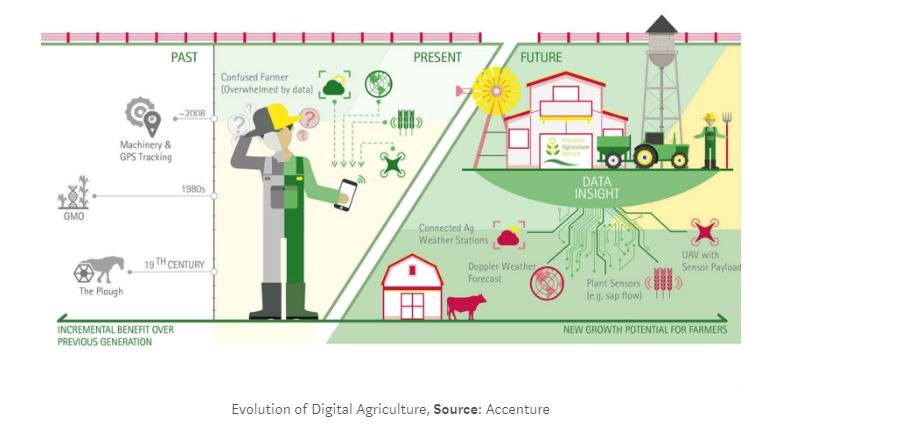

Progression of Precision Agriculture


Yielding More with Technology: A Brief History of Precision Agriculture

Even though it could be considered our oldest industry, agriculture has probably changed more in the last 100 years than in the previous 10,000. In the United States, for example, the farm population peaked in 1916 at 32% of the country’s 101.6 million people. Today the United States population is more than three times larger, yet only 12% of people live in rural areas and only 2% of the population produces food for consumption. Fewer farmers are able to produce much more food than at any time in our history. The nature of food production has changed dramatically, with the agricultural industry increasingly relying on technology to meet the food needs of the world’s growing population.

Taking the guesswork out of the harvest

Before the agricultural industry embraced the technologies that enabled it to maximize yield and minimize costs, farmers had to rely heavily on judgment and guesswork when making farming decisions. This form of decision-making posed some key challenges. For example, without concrete data on the water or nutrient needs of their crops, farmers usually applied more than was needed. Farmers also had to endure the time-consuming task of physically inspecting crops across hundreds of acres to find any issues. Factors like these gave technology companies an opportunity to satisfy an unmet need: technology that would let farmers collect accurate and precise data to ease their decision-making. This is when the concept of precision agriculture was conceived.

Precision agriculture is a farm management concept focused on managing crop production inputs, such as seeds, water, fertilizers and pesticides, on a site-specific basis to increase profits, reduce waste and maintain environmental quality. Since its inception in the 1960s, the main goal of precision agriculture has been to equip farmers with the tools necessary to make the right farming decisions, at the right location, at the right time, and at the right intensity.

Precision agriculture is considered a key component of the third wave of the modern agricultural revolution, which is also referred to as the Green Revolution. Between the 1930s and late 1960s, there was an increase in technology transfer initiatives aimed at raising agricultural production worldwide. The focus was on bringing into the industry technologies that already existed but had not been widely applied to agriculture. Some of the key information technology and geospatial tools transferred to the agricultural industry were geographical information systems (GIS), global positioning systems (GPS), and yield mapping systems. Additionally, the “Green Revolution” was driven by the adoption of new practices like the use of chemical fertilizers and agro-chemicals, controlled water supplies, and high-yielding crop varieties. These new practices were all driven by the need for data-based decision-making, and they replaced the ‘traditional’ approach civilization had relied on for thousands of years.

Merging Technology with Farming

The birth of precision agriculture has often been linked to the introduction of GIS technology to the agricultural industry in the 1980s. Before the 1980s, GIS had no commercial applications and was mainly reserved for research institutions. However, as farm sizes continued to grow, it became increasingly difficult for farmers to collect the geographic data they needed to make farming decisions. GIS technology was therefore essential: it allowed farmers to digitally map their farms and collect geographical data that could inform planting decisions based on factors like soil type, rainfall and topography. GIS was an important farming tool during this period because it gave farmers a better alternative to recording data on paper.

In the early 1990s, global positioning systems (GPS) were introduced to agriculture. Initially developed in 1973 to facilitate troop movement, GPS wasn’t available for commercial use until 1995. When GPS was first introduced to agriculture, it was sold at a very high cost and with limited functionality. The GPS units available at that time could only locate a position to within 10-100 m. Even with these limited capabilities, farmers who adopted the technology found it useful for navigation, and later for yield mapping and monitoring, a process in which GPS data is used to analyze key variables like crop yield and moisture content.

Developed in the early 1990s, yield mapping is considered one of the key technologies in precision agriculture. Rather than having to manually calculate yield per acre by weighing the harvested crop, yield mapping systems, which consisted of sensors, a combine, and a computer, allowed farmers to record crop yield in real time. As a combine moved through the field, a yield monitor tracked how many bushels were being harvested per acre. This technology allowed farmers to compare yield distribution within the field year over year to determine which fields needed more irrigation, or to identify fields that were producing little to no crop. Of all the precision agriculture tools first introduced, yield mapping systems were the most popular because they were simple to understand and operate.
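To make the bushels-per-acre idea concrete, here is a minimal sketch of the arithmetic a yield monitor might perform for each GPS-tagged sample. It is an illustration only, not a description of any particular manufacturer's system: the `Reading` fields, the function name, and the example swath width and bushel weight are all assumptions introduced here.

```python
from dataclasses import dataclass
from typing import Optional

SQ_FT_PER_ACRE = 43_560  # one acre is defined as 43,560 square feet


@dataclass
class Reading:
    """One hypothetical yield-monitor sample (field names are illustrative)."""
    lat: float                  # GPS latitude of the combine
    lon: float                  # GPS longitude of the combine
    grain_flow_lb_per_s: float  # grain mass flow reported by the sensor
    speed_ft_per_s: float       # ground speed of the combine
    seconds: float              # length of the sampling interval


def bushels_per_acre(r: Reading, swath_width_ft: float,
                     lb_per_bushel: float) -> Optional[float]:
    """Estimate yield for one sample as harvested bushels / area covered."""
    # Area covered in the interval = distance travelled x width of the cut.
    area_acres = r.speed_ft_per_s * r.seconds * swath_width_ft / SQ_FT_PER_ACRE
    if area_acres <= 0:
        return None  # combine not moving, so no area was harvested
    # Convert the mass of grain collected in the interval to bushels.
    bushels = r.grain_flow_lb_per_s * r.seconds / lb_per_bushel
    return bushels / area_acres


# Example with assumed values: a 20 ft swath and a 56 lb bushel.
sample = Reading(lat=41.0, lon=-93.0, grain_flow_lb_per_s=28.0,
                 speed_ft_per_s=6.0, seconds=1.0)
estimate = bushels_per_acre(sample, swath_width_ft=20.0, lb_per_bushel=56.0)
```

Pairing each estimate with the sample's latitude and longitude is what turns a running yield figure into a yield map that can be compared from one year to the next.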

The grass wasn’t always greener on the other side

Precision agriculture tools make farming practices more efficient and reduce the misapplication of products. Yet, like any major technological change, precision agriculture technologies were met with a lot of skepticism and controversy. Farmers were skeptical about the benefits these technologies promised. A lack of understanding of the technologies, combined with a lack of support from manufacturers, also made farmers hesitant to adopt the new systems. Manufacturers left farmers to learn how to operate these systems and to troubleshoot the problems they encountered on their own. This created a steep learning curve for early adopters and discouraged others from adopting the new tools. Moreover, for some precision agriculture tools like GPS, the hardware and software components were usually made by different companies, which led to compatibility problems between the two. Early adopters of GPS also struggled to get a reliable position fix. Given the high cost and complexity of GPS technology, many farmers were reluctant to purchase it.

During the late 1990s, the agricultural industry reached something of a plateau. Farmers were tired of experimenting with new technologies, encountering numerous bugs and being unable to process the data they collected because of software problems. The lack of training manuals and of personnel to provide technical support was also a major issue for early adopters. This plateau put pressure on producers to develop tools that were much easier to use and understand, and to provide the needed technical support. The number of software and hardware producers also grew, giving farmers more options to match their production goals and management objectives. This growth in the number of producers helped drive down prices, making these systems a less risky and less costly purchase.

This is part 1 in a series of articles on precision agriculture. Check back soon for our next article on how advances in imaging technology allow today’s farmers to more precisely manage plant health and crop yields.
