Artificial Intelligence in Medical Imaging & Diagnostics: Are we there yet?

The case for utilizing AI & ML tools in medical imaging & diagnostics

A close friend of mine was recently diagnosed with an aggressive cancer. The cancerous lesion was removed; however, because the cancer was so malignant, there was concern that it could have spread. So his doctors threw the book at him when it came to tests. He had an MRI to check for anomalies in his brain. A CT scan was performed to confirm the MRI findings and to look at his lymph nodes. A Positron Emission Tomography (PET) scan was conducted to identify any other areas to which the cancer may have spread. So far so good for my friend (phew!), but his experience highlights both the power and the challenges of diagnostic imaging. Three imaging modalities, each followed by image analysis, were used in his diagnosis. And with an aggressive cancer, all of this has to be done very quickly to ensure the best outcome. Meanwhile, healthcare systems are becoming more and more overwhelmed, and in some places it can be hard to schedule an MRI, let alone get the results in a timely manner. One solution that has been coming down the pike for some time is the use of Artificial Intelligence (AI) and Machine Learning (ML) to automatically assess and characterize images. The potential of these tools is substantial, but the risks, ethical concerns and regulatory hurdles are not small either.

Let’s start with what AI can do in image analysis right now.

Artificial Intelligence, at its core, allows machines to learn from experience, solve problems and perform human-like tasks by adjusting to new inputs. When radiologists are trained, they view many, many images to master the ability to diagnose a disease based on characteristics within the image. As they progress in their careers and see more and more images, they become more proficient at reading images and correctly identifying anomalies. Similarly, an AI (or machine learning) program developed to automatically detect tumors would need to be fed many, many images to learn to decipher the data. Initially, these would need to be images of known tumors: tumors that a radiologist had already identified. These known positive images help to “train” the program. As the program sees more and more images, it becomes better at identifying features and characteristics of the target, for instance tumors of a certain size or in a certain location. The power lies not just in this identification: given the massive amount of data in these images, the computer may be able to detect anomalies that are not visible to even a very well-trained human eye.
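
To make the training idea concrete, here is a minimal sketch, not any real diagnostic system: a perceptron, one of the simplest learning algorithms, trained on labelled examples. Everything in it is a hypothetical stand-in. Each "image" is a made-up 3x3 patch of pixel intensities, a bright centre pixel plays the role of a lesion, and the labels play the role of a radiologist's confirmed reads. (In practice a model also needs negative examples, images known to be tumor-free, so those are included too.) Every time the program gets a labelled example wrong, it nudges its weights, which is how it improves as it sees more cases.

```python
import random


def make_patch(has_lesion, rng):
    """Hypothetical toy 'image': a 3x3 patch flattened to 9 intensities in [0, 1]."""
    patch = [rng.uniform(0.0, 0.3) for _ in range(9)]  # dim background noise
    if has_lesion:
        patch[4] = rng.uniform(0.7, 1.0)  # bright centre pixel stands in for a lesion
    return patch


def predict(weights, bias, x):
    """Return 1 ('lesion') if the weighted sum of pixels is positive, else 0."""
    score = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if score > 0 else 0


def train_perceptron(examples, epochs=100, lr=1.0):
    """Learn from labelled examples by correcting the weights after every mistake."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for epoch in range(1, epochs + 1):
        mistakes = 0
        for x, label in examples:
            error = label - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every labelled example is now classified correctly
            return weights, bias, epoch
    return weights, bias, epochs


def accuracy(weights, bias, examples):
    correct = sum(predict(weights, bias, x) == label for x, label in examples)
    return correct / len(examples)


rng = random.Random(0)
# Hypothetical "known" cases: label 1 = lesion confirmed, 0 = no lesion.
training = [(make_patch(i % 2 == 1, rng), i % 2) for i in range(200)]
weights, bias, epochs_used = train_perceptron(training)
print(f"stopped after {epochs_used} epochs, "
      f"training accuracy = {accuracy(weights, bias, training):.2f}")
```

On this toy data the two classes are cleanly separable, so the perceptron is guaranteed to stop making mistakes on its training set. Real medical images are far messier, which is why practical systems rely on much larger models and far more data.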

Perhaps the best example of AI currently being deployed is in self-driving cars. The systems driving the car take in massive amounts of data, including images of the surroundings, process that data and decide how the car will proceed (e.g., speed up, slow down, brake suddenly or change lanes). Those systems had to be trained on vast amounts of data before they could even begin driving in a controlled environment with no unknowns (i.e., no real pedestrians or unsuspecting drivers). The development of self-driving cars has been slow, partly because of the sheer complexity involved and partly because the public has very little appetite for risk. AI in medical imaging faces many of the same hurdles.

What are the experts saying?

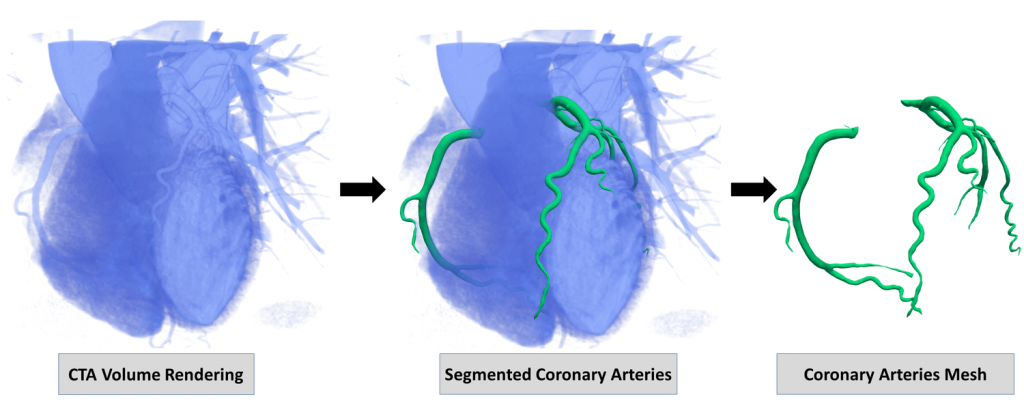

Dr. Masoom Haider is a clinician-scientist and radiologist in the Joint Department of Medical Imaging at the University of Toronto. His research focuses on mining data from CT and MRI scans to develop automated tools for diagnostics and prognostics. He told me that the human eye can use only a small portion of the data in each CT image, but automated computational tools can begin to unlock value in the rest of the image data, unseen by the radiologist. Some tools that are used readily in other fields, such as automated segmentation, have not yet made their way to the clinic. Segmentation techniques automatically detect and outline a feature in an image. The computer can examine the image pixel by pixel, whereas the human eye cannot distinguish features that small. This can be very powerful when trying to detect anomalies within a busy image. I used segmentation tools daily when I was studying fruit fly embryos. I identified the feature of interest on the first image of a time-course movie, and the program (developed by my supervisor) could automatically follow that feature through the remaining images in the movie.
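
Segmentation and tracking of this kind can be illustrated with a simple classical technique called region growing. This is a hedged sketch, not the program my supervisor wrote and not a clinical tool: starting from a seed pixel that a person marks on the first frame, it collects every connected pixel above a brightness threshold, then reuses the centre of that region as the seed for the next frame. The threshold, the function names and the frames (synthetic 10x10 grids with a bright 3x3 blob drifting one pixel per frame) are all hypothetical.

```python
from collections import deque


def segment_from_seed(image, seed, threshold):
    """Region growing: gather 4-connected pixels >= threshold, starting at seed."""
    rows, cols = len(image), len(image[0])
    r0, c0 = seed
    if image[r0][c0] < threshold:
        return set()  # the seed is not on a bright feature
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region and image[nr][nc] >= threshold):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


def centroid(region):
    """Centre of a region, rounded to the nearest pixel."""
    n = len(region)
    return (round(sum(r for r, _ in region) / n), round(sum(c for _, c in region) / n))


def track(frames, first_seed, threshold):
    """Follow one feature through a movie, seeding each frame from the previous centroid."""
    seed = first_seed
    path = []
    for frame in frames:
        region = segment_from_seed(frame, seed, threshold)
        if not region:  # feature lost: a human has to step back in
            break
        seed = centroid(region)
        path.append(seed)
    return path


def make_frame(size, centre):
    """Hypothetical synthetic frame: dark background with a bright 3x3 blob."""
    frame = [[0.0] * size for _ in range(size)]
    cr, cc = centre
    for r in range(cr - 1, cr + 2):
        for c in range(cc - 1, cc + 2):
            frame[r][c] = 1.0
    return frame


frames = [make_frame(10, (4, 2 + t)) for t in range(5)]  # blob drifts right
print(track(frames, first_seed=(4, 2), threshold=0.5))
```

Because each frame is seeded from the previous one, this only works while the feature moves less than its own width between frames; once the seed lands off the feature, tracking stops and a person has to re-mark it.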

“Auto-pilot still has pilots in the cockpit”

That tracking program saved me months (if not years) in my research and let me focus on understanding the results instead of tediously delineating each image by hand. The algorithm was not foolproof, and it still required human interaction, which will be the case for medical imaging for many years to come. Dr. Haider describes the AI tools currently in development as augmenting radiologists to improve efficiency. Some researchers are working on AI tools that could fully diagnose a patient, but those appear to be rather distant, partly because of the complexity of handling health data, partly because of public discourse, and of course partly because of the need to understand and test for bias. Assistive automated tools, however, have started to become commercially available, such as a suite of tools from Arterys that supports radiologists and physicians in areas ranging from breast cancer to stroke detection. Still, Dr. Haider cautions that AI will never be perfect because there will always be edge cases: “auto-pilot still has pilots in the cockpit.”

Another area of intense development for AI tools is breast cancer screening. Dr. Emily Boulos, an early-career clinician and researcher, practices at Women’s College Hospital and Mt. Sinai Hospital; she also holds an adjunct faculty position at the University of Toronto. Her clinical work focuses on breast imaging and breast cancer detection. She tells me that semi-automated tools are currently used to help analyze mammograms, but they are not yet capable of even preliminary diagnosis and require thorough radiologist review. Dr. Boulos sees the need for AI and ML in image analysis and is involved in starting research studies to bring these tools to her field. Breast imaging is an ideal place to begin using AI because mammograms are already highly standardized: the image views are standard and the images have fairly standard characteristics. Additionally, essentially a single disease is being screened for, breast cancer, which is detected by the presence of a tumor or mass, tissue calcification and/or distortion in the image. Unlike other body systems, where there may be a multitude of diseases or significant variation in the system itself (size and location of organs, etc.), breast imaging is more consistent. Despite that consistency, the images themselves are quite complex, and interpretation can be challenging and time-consuming. Dr. Boulos hopes to support advances in AI tools that eliminate some of the complexity in breast cancer image analysis and improve diagnostics. She sees AI as a path to more specialization in radiology, a good thing when you consider the vast complexity of human anatomy.

The challenges that remain: government regulation, public opinion

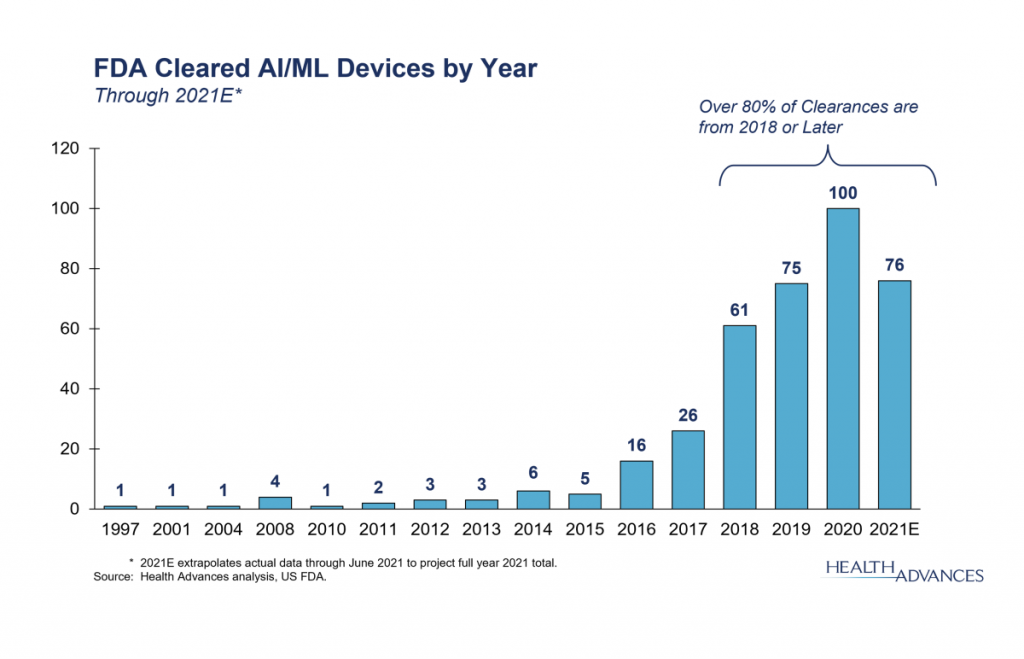

There is huge potential for improving the efficiency and efficacy of care across many image-based diagnostics. Imagine a patient who comes to the clinic with shortness of breath and dizziness. The clinic takes a chest X-ray, but the radiologist has 40 X-rays to look at that day, so they may not read that patient’s X-ray until hours later. Maybe too late. What if, instead, there were an automated triage tool: software that read each image and prioritized patients based on learned patterns of concern (like a blockage in the lung)? Then the radiologist could view the most critical images sooner. The advantage of tools focused on improving efficiency is that the burden of proof does not rest on efficacy, and proving efficacy is a much larger, more challenging hurdle. Even so, new tools are being approved by the FDA regularly in more and more medical fields. A little over a dozen AI/ML tools for medical imaging were approved by the FDA in 2017, whereas two dozen were approved in 2019 alone.
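
At its simplest, a triage tool like this reorders the radiologist's worklist. A minimal sketch, assuming some upstream image-reading model (hypothetical, not shown) has already given each chest X-ray a "concern score" between 0 and 1: a priority queue hands the radiologist the most concerning study first, and studies with equal scores are read in the order they arrived. The class name, study IDs and scores are all made up for illustration.

```python
import heapq
import itertools


class TriageWorklist:
    """Hypothetical worklist ordered by concern score (highest first), FIFO on ties."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker that preserves arrival order

    def add(self, study_id, concern_score):
        # heapq is a min-heap, so negate the score to pop the most concerning study first.
        heapq.heappush(self._heap, (-concern_score, next(self._arrival), study_id))

    def next_study(self):
        _, _, study_id = heapq.heappop(self._heap)
        return study_id

    def __len__(self):
        return len(self._heap)


worklist = TriageWorklist()
# Hypothetical model scores, listed in order of arrival.
for study_id, score in [("CXR-001", 0.10), ("CXR-002", 0.92),
                        ("CXR-003", 0.10), ("CXR-004", 0.55)]:
    worklist.add(study_id, score)

order = [worklist.next_study() for _ in range(len(worklist))]
print(order)
```

Nothing is dropped from the list: every study is still read by a radiologist, only sooner or later, which is why tools like this can be framed around efficiency rather than diagnostic efficacy.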

Perhaps as important as the leaps being made in AI for image analysis are the substantial advances being made by the companies producing imaging equipment. Automated tools are being used to generate better-quality images, reduce scan time and reduce noise within the image. For instance, Teledyne’s Astrocyte software offers anomaly detection, classification and segmentation. Natural Language Processing is being used to improve dictation: radiologists dictate measurements and features while they review images, and because current dictation methods are often inaccurate, improvements are being made in tools that can better recognize and transcribe human speech. In 2020, Canon Medical Systems received FDA approval for its Advanced intelligent Clear-IQ Engine (AiCE), which offers AI-based image noise reduction. Reducing noise produces higher-quality images, which can help eliminate errors in diagnostics. None of these tools can operate on its own; they all augment the current process. Still, they are critical steps towards improving efficiency and helping medical imaging deal with the massive datasets it creates each year.
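
Canon’s engine is a deep-learning reconstruction method whose internals are beyond the scope of this piece, so the sketch below uses a much older and simpler stand-in, a 3x3 median filter, purely to show what "reducing noise within the image" means. Each pixel is replaced by the median of its neighbourhood (clipped at the image edges), which suppresses isolated noise spikes while leaving a uniform region unchanged. The function name and the 5x5 "scan" values are hypothetical.

```python
from statistics import median


def median_filter(image, radius=1):
    """Replace each pixel with the median of its neighbourhood, clipped at the edges."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            window = [image[rr][cc]
                      for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                      for cc in range(max(0, c - radius), min(cols, c + radius + 1))]
            row.append(median(window))
        out.append(row)
    return out  # a new image; the input is left untouched


# Hypothetical flat grey 5x5 "scan" corrupted by two isolated noise spikes.
noisy = [[100] * 5 for _ in range(5)]
noisy[1][1] = 255  # bright speck
noisy[3][4] = 0    # dark dropout
clean = median_filter(noisy)
print(clean[1][1], clean[3][4])
```

A fixed filter like this can also smooth away genuinely small details, so it is only an illustration of the concept, not a substitute for the tools described above.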

One of the biggest challenges for AI in medical imaging is public opinion. We take for granted the subjective nature of medical diagnostics when a human is performing it. According to Dr. Boulos, every breast image requires some kind of judgment call, because biology is complex and the morphology and details of every image are different, even though they share similar characteristics. AI tools would still be making judgment calls, ideally using more data than the human eye can, but there may still be errors. And that is a sticking point: what do we do when there is an error? Who is to blame when something is missed on the image? The developers of the AI? The hospital? This is a tricky question with no clear answer at this point. We hope to build tools that are at least as good as humans, if not better, but they will not be infallible. We all accept risk in our daily lives, and with more education about the risk-reward case for AI in medical imaging, perhaps we will more readily accept this risk as well.

So, are we there yet? No, not yet – but we are on our way to a future where artificial intelligence may play a bigger role in medical imaging and diagnostics.
