Bigger, Faster, More Complicated

Interface Bandwidth in 2015

Today it may be part of the universal human condition. From the grandmother trying to email the right-size photo from her smartphone, to the pro photographer hunting around for an SD card reader, to the vision system designer trying to account for high-speed, high-resolution area scan sensors, the feeling is the same: “How am I ever going to get all of my images out of this freaking camera?”

The answer is the interface.

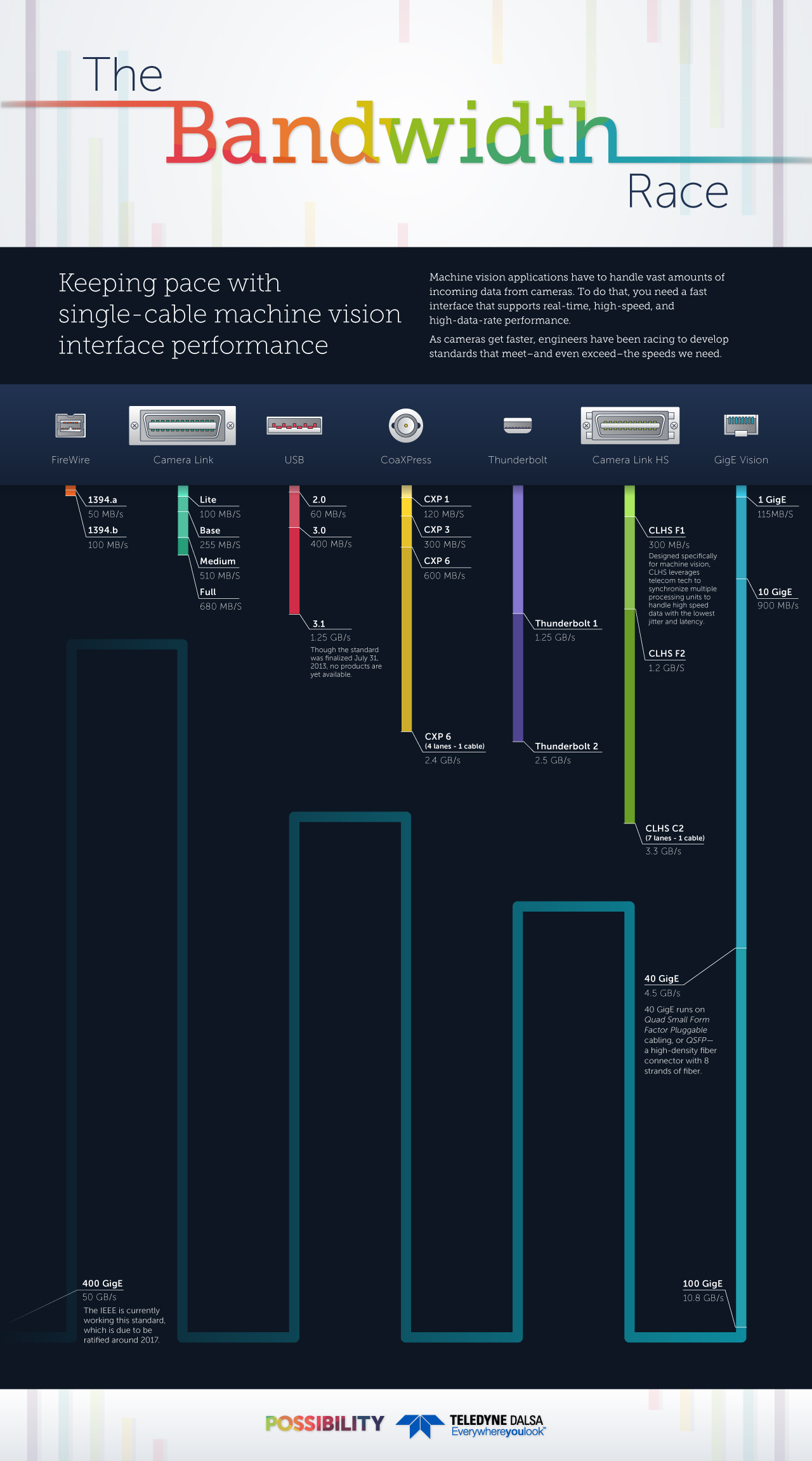

This link between the camera, where image data is created, and the PC that processes the data, is shaped by a balance of priorities. The most important ones include performance, cost, and reliability. For machine vision, getting this balance right is particularly important. Machine vision applications typically deal with vast amounts of incoming sensor data that the processing computer must display, analyze, and/or act on at industrial speeds. To do that, you need a fast interface that supports real-time, high-speed, and high-data-rate performance.

An assembly-line robot requires the near-instantaneous transfer of imaging data, from a camera to a processor, to precisely locate and choose parts out of a storage container. Within fractions of a second the vision system must detect and model an object and its surrounding environment, accurately select the part, then determine its next destination. The more precision needed, the higher the camera resolution required.

The faster the assembly line, the faster the camera must be. Both aspects put pressure on the interface.
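To make that pressure concrete, here is a minimal sketch of the raw data rate a sensor produces. The resolutions, bit depth, and frame rates are hypothetical values chosen for illustration, and the calculation ignores protocol overhead and blanking intervals.

```python
def required_bandwidth_bytes_per_s(width_px, height_px, bits_per_pixel, frames_per_s):
    """Raw sensor output in bytes per second (no protocol overhead, no blanking)."""
    bits_per_frame = width_px * height_px * bits_per_pixel
    return bits_per_frame * frames_per_s / 8


# Hypothetical cameras, for illustration only.
base = required_bandwidth_bytes_per_s(1280, 1024, 8, 60)
higher_res = required_bandwidth_bytes_per_s(2560, 2048, 8, 60)  # double the resolution per axis
faster = required_bandwidth_bytes_per_s(1280, 1024, 8, 240)     # four times the frame rate

for label, rate in [("base", base), ("higher resolution", higher_res), ("faster", faster)]:
    print(f"{label}: {rate / 1e6:.1f} MB/s")
```

Under these assumptions, doubling the resolution along each axis costs the interface exactly as much as quadrupling the frame rate: both multiply the data rate by four.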


Competing standards: When the solution is part of the problem

There are several driving factors behind the proliferation of machine vision camera interface standards, such as cost, cable length, compatibility, and processing overhead, but data rate is arguably the main force pushing existing standards to ever-higher performance. This creates a diverse landscape of machine vision interfaces, with everything from small variations to complicated multi-cable compromises, all for the sake of raw speed.

Camera Link HS (CLHS) is a high-performance interface designed specifically for machine vision applications. It can handle the widest range of industry demands, from tiny camera configurations to many megapixels and very high frame rates. CLHS standardizes the connection between cameras and frame grabbers and defines a complete interface, including provisions for data transfer, camera timing, serial communications, and real-time signaling to the camera. Camera Link is a non-packet-based protocol and remains the simplest camera/frame grabber interconnect standard. The trade-off is in cost: Camera Link requires a frame grabber on the PC end, which can significantly raise the price of the entire system.

GigE Vision works with established plug-and-play PC technology, reducing cost and complexity. GigE Vision is compatible with standard Gigabit Ethernet hardware, allowing the networking of multiple cameras and long cable runs (up to 100 meters) using inexpensive CAT5e or CAT6 cables. Since GigE is based on commonly adopted technology, there is widespread support for improvements: upcoming high-speed standards like 40GigE and 100GigE ensure its future.

USB Vision is based on one of the most popular commercial interface standards. USB can be found on virtually all PCs, helping keep costs and complexity down. Although it is widely used for consumer computer products, USB has a relatively low power rating, so it generally cannot meet the electrical power requirements of high-speed, high-power cameras. Also, the nature of USB (it connects directly to the computer bus instead of being mediated by a frame grabber) takes CPU cycles away from the machine vision software running on the PC. The new USB 3.1 standard is intended to solve many of these shortcomings with higher bandwidth and the ability to transmit up to 100 watts with the right hardware.

CoaXPress has been a surprise hit over time, proving to offer a compelling combination of simple and inexpensive cables with high-bandwidth data transmission, power, and triggering capabilities. While it is nearing its bandwidth ceiling, it retains a devoted following based on some unique qualities. One of the great benefits of CoaXPress is its ability to work through rotary joints and slip rings, which are typical in defense, robotics, surveillance, and broadcast applications where continuous panning is needed.

A Glimpse of the Future of Bandwidth

Smaller objects, larger fields of view, and faster imaging rates have driven market demand for higher-resolution and higher-frame-rate systems. In fact, today’s sensors are already capable of generating image data at 1.3 GB/s and pushing hard towards rates beyond 5 GB/s. At these rates, most current system interconnects are becoming bottlenecks between these high-performance sensors and the PC. To meet this challenge, significant advances in GigE are already planned and Intel is taking things even further with its MXC standard.
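As a rough illustration of the bottleneck, the sketch below compares the sensor rates quoted above with the nominal line rates of several Ethernet generations. The link table is an assumption taken from the rates implied by each name; real GigE Vision throughput is lower once protocol overhead is counted, so treat the result as a best case.

```python
# Nominal Ethernet line rates in gigabits per second (assumption: the rate
# implied by each name, with no allowance for protocol overhead).
ETHERNET_LINKS_GBIT = {"GigE": 1, "10GigE": 10, "40GigE": 40, "100GigE": 100}


def link_capacity_bytes_per_s(gbit_per_s):
    """Convert a nominal line rate in Gb/s to bytes per second."""
    return gbit_per_s * 1e9 / 8


def links_that_fit(sensor_bytes_per_s):
    """Names of links whose nominal capacity is at least the sensor's output rate."""
    return [
        name
        for name, gbit in ETHERNET_LINKS_GBIT.items()
        if link_capacity_bytes_per_s(gbit) >= sensor_bytes_per_s
    ]


print(links_that_fit(1.3e9))  # sensor at 1.3 GB/s
print(links_that_fit(5.0e9))  # sensor at 5 GB/s
```

On these nominal numbers, a 1.3 GB/s sensor already exceeds a 10 Gb/s link (1.25 GB/s), and a 5 GB/s sensor fills a 40 Gb/s link with no headroom at all.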

It’s still unclear which technologies will find their way into the machine vision industry. Future protocols would also need to provide an efficient method to support camera controls required by the most demanding machine vision acquisition applications. These include reliable and low-jitter camera trigger, reliable and low-jitter strobe synchronization, reliable and time-accurate camera exposure control, and reliable line- and frame-rate control.

New applications are already pushing data rates…and dimensions. At its developer conference in November 2014, Samsung announced “Project Beyond,” a camera built to capture 3D footage for use with Samsung’s Gear VR headset. The device has 16 individual high-definition cameras arranged as a disc.

Together, they capture a gigapixel — roughly 3.1 gigabytes of data — every second.

Duke University researchers have been experimenting with their 1.5 gigapixel AWARE 10 camera since 2013, and their upcoming 4 gigapixel AWARE-10 is now being completed and refined. While they continue to research and work towards 10 gigapixel and 50 gigapixel cameras, these multi-cameras are limited not by bandwidth but by optics and heat at the sensor head.

The problem of managing super-speed data rates over networks will also need to be solved. As CERN is discovering, you can accumulate massive amounts of data, but it’s of little use to you if you can’t share it. To deal with these kinds of challenges, Bell Labs has been exploring multiple techniques, culminating in a recent world record connection speed of 10Gbps over copper wires with its XG.fast technology. These speeds were made possible by using a large 500MHz frequency range. Since these higher frequencies attenuate very quickly, XG.fast could only reach 30 meters (100 feet).

Reality check

These gains are impressive, and they are not likely to stop any time soon. Researchers at Duke University have also explored the fundamental limits of pixel count. According to them, the limits of digital imaging lie far beyond our current capabilities. Their estimate assumes 1K photons per pixel, which allows about 10 million pixels split between temporal and spectral degrees of freedom. They also assume 10K fps, 100 spectral channels, and 10 focal range bins, suggesting 100 petapixels per second as a “reasonable” physical limit.

By these calculations, visual reality could be captured by a 100 petapixel camera running at 10,000 fps with 24-bit color depth, generating 3,000 exabytes per second.
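The arithmetic behind that figure can be checked in a few lines. This sketch reads the 100 petapixels as a per-frame count, which is how the sentence above multiplies it, and assumes decimal units (1 exabyte = 10^18 bytes). The function name is illustrative.

```python
def data_rate_exabytes_per_s(pixels_per_frame, frames_per_s, bits_per_pixel):
    """Uncompressed data rate in decimal exabytes per second."""
    bytes_per_pixel = bits_per_pixel // 8
    bytes_per_s = pixels_per_frame * frames_per_s * bytes_per_pixel
    return bytes_per_s / 10**18


petapixel = 10**15
rate = data_rate_exabytes_per_s(100 * petapixel, 10_000, 24)
print(f"{rate:,.0f} EB/s")  # 3,000 EB/s
```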

Sadly, a compatible interface and cable are not yet available. Look for Cameralink 100HS, planned for testing in 2056.
