A Close Look at Vision Guided Robotics (VGR)

How complex automation challenges are being met through the marriage of robotics and advanced machine vision.

We’ve all seen videos of robots rapidly assembling cars with little or no human intervention. Industrial robots like these have cut costs and increased productivity in virtually every manufacturing sector, but they have a major shortcoming: they can’t “see.” Programmed to repeat exactly the same motions over and over again, they are unable to detect and handle objects of different shapes, sizes, and colors, or objects that touch and overlap. So if a product changes or a new one is added to the production line, the robots must be reprogrammed. And if components are delivered to the line by traditional hoppers, shake tables or bowl feeders, that feeding equipment must be retooled as well.

Coping with chaos

Now a new generation of robots guided by advanced machine vision is taking robots far beyond the repetitive tasks typically found in mass production. Fuelled by smaller, more powerful and less expensive cameras and other vision sensors, increasingly sophisticated robotic algorithms and processors with machine vision-specific hardware accelerators, these Vision Guided Robot (VGR) systems are rapidly transforming manufacturing and fulfillment processes.

VGR makes robots highly adaptable and much easier to implement in industries where new products are introduced frequently and production runs are short, including medical device and pharmaceutical manufacturing, food packaging, agricultural applications, life sciences and more. [1] For example, a leading global automotive manufacturer operating a high-volume plant in China uses a GEVA 1000 vision system from Teledyne DALSA to ensure that robots on two assembly lines securely grip parts and place them on a rapidly moving conveyor. The parts used to be lifted and placed by hand; automating the task has increased productivity roughly sixfold.

Systems like this are well suited to environments where clutter is unavoidable or too expensive to eliminate, or where line speeds are too fast for human workers. Advanced systems are even tackling what may be the most challenging VGR application: picking randomly distributed objects of varying sizes, shapes and weights from bins in factories and in distribution centers such as Amazon’s network of massive automated fulfillment centers.

Taking On Random Bin Picking

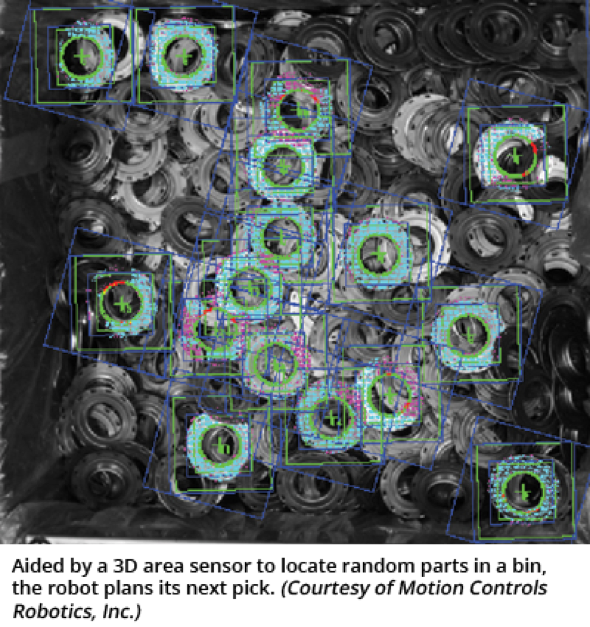

Robotic random bin picking is especially challenging because the VGR system must locate and pick a specific part in a chaotic environment. As the robot removes parts from the bin, other parts constantly shift position and orientation. The system must recognize the correct objects, determine in which order to pick them up, and calculate how to grip, lift and place them without colliding with other objects or bin walls. This requires a combination of high-performance machine vision hardware, sophisticated software and enough computing power to process large amounts of visual data in real time.
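The decision of which part to pick next can be illustrated with a deliberately simplified heuristic. The sketch below assumes the vision system has already reduced each detected part to a hypothetical `DetectedPart` record (footprint centre, top-surface height and a circular footprint). It skips any part that is covered by a higher, overlapping part and, among the remaining candidates, prefers the one with the most free space around it. Production systems work from full 3D pose estimates and grasp-quality models; this only shows the shape of the ordering problem.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DetectedPart:
    """One part located by the vision system (hypothetical data model)."""
    part_id: str
    x: float       # footprint centre in bin coordinates, mm
    y: float
    z: float       # height of the part's top surface above the bin floor, mm
    radius: float  # footprint approximated as a circle, mm


def footprint_gap(a: DetectedPart, b: DetectedPart) -> float:
    """Edge-to-edge distance between two footprints in the x-y plane (negative = overlap)."""
    return math.hypot(a.x - b.x, a.y - b.y) - (a.radius + b.radius)


def is_buried(p: DetectedPart, parts: List[DetectedPart]) -> bool:
    """A part is buried if another part overlaps its footprint and lies higher."""
    return any(q is not p and q.z > p.z and footprint_gap(p, q) < 0 for q in parts)


def clearance(p: DetectedPart, parts: List[DetectedPart]) -> float:
    """Smallest gap between p and any other part; infinite if p is alone."""
    return min((footprint_gap(p, q) for q in parts if q is not p), default=math.inf)


def next_pick(parts: List[DetectedPart]) -> Optional[DetectedPart]:
    """Choose one part to pick from the current image.

    Buried parts are excluded; among the rest, prefer the part with the most free
    space around it (room for gripper fingers), breaking ties by height.
    """
    candidates = [p for p in parts if not is_buried(p, parts)]
    if not candidates:
        return None
    return max(candidates, key=lambda p: (clearance(p, parts), p.z))
```

Because parts shift after every pick, a function like `next_pick` would be called on a fresh image each cycle rather than used to plan the whole bin in one pass.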

Machine vision hardware can be anything from a compact smart camera with an integrated vision processor, such as Teledyne DALSA’s BOA Spot, to complex laser and infrared sensors and high-resolution, high-speed cameras.

And what about 3D Vision?

VGR systems often combine more than one type of sensor. For example, a robot uses a 3D area sensor to locate and pick randomly positioned parts in a bin; a 2D camera then detects the orientation of each part on the fly so that the robot can place it correctly on a conveyor.
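For the 2D step, one classic way to estimate a part’s in-plane orientation is from the image moments of its silhouette. The sketch below assumes the part has already been segmented into a binary mask (a list of rows of 0/1 values); the function name and data layout are illustrative and not taken from any particular vision library.

```python
import math
from typing import List


def part_orientation(mask: List[List[int]]) -> float:
    """Angle (radians) of the principal axis of the foreground pixels in a binary mask.

    Uses second-order central image moments. x runs along columns and y along rows
    (image convention, y pointing down). The result lies between -pi/2 and pi/2.
    An elongated part's axis has no head or tail, so the angle is ambiguous by
    180 degrees; for a rotationally symmetric blob it is undefined and this returns 0.
    """
    pixels = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pixels:
        raise ValueError("mask contains no foreground pixels")
    n = len(pixels)
    cx = sum(x for x, _ in pixels) / n  # centroid
    cy = sum(y for _, y in pixels) / n
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)
    return 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
```

In practice the 180-degree ambiguity is usually resolved with an additional asymmetric feature of the part before the robot places it.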

By combining laser 3D Time-of-Flight (ToF) scanning with snapshot 3D image capture, some VGR systems gain enough resolution to work with a wider range of objects than a scanning system alone can handle, without the camera movement that traditional snapshot camera systems require. ToF scanning determines depth by measuring how long laser light takes to travel from the sensor to an object’s surface and back; it has the advantage of working in any lighting condition.
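The ToF relationship is simple enough to write down directly: light covers the sensor-to-surface distance twice, so depth is half the speed of light times the measured round-trip time. The sketch below models an idealized pulse measurement rather than any specific sensor; the constant and function names are illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # in vacuum; in air it is about 0.03% lower


def tof_depth_m(round_trip_time_s: float) -> float:
    """Distance from sensor to surface given the measured round-trip time of light.

    The light travels sensor -> surface -> sensor, so the one-way distance is half
    of (speed of light x elapsed time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def timing_resolution_s(depth_resolution_m: float) -> float:
    """Round-trip timing precision needed to resolve a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S
```

For example, light returns from a surface 1.5 m away after about 10 ns, and resolving 1 mm of depth means timing the return to within roughly 6.7 ps. That demand is one reason many ToF sensors measure the phase shift of modulated light instead of timing individual pulses directly.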

Structured-light 3D systems, such as the original Microsoft Kinect sensor for video gaming, project an invisible infrared light pattern onto an object, then generate a 3D depth image by using a 2D camera to detect how the object’s surface distorts that pattern. This process can be used for 3D mapping of multiple objects in a sorting bin.
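One common way to model how a structured-light system turns pattern distortion into depth is ordinary triangulation, treating the projector as a second “camera.” The sketch below assumes a rectified projector-camera pair, so depth follows the familiar stereo relation Z = f·b/d; it is a simplified textbook model, not a description of Kinect’s actual internal processing, and the function names are illustrative.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth of a surface point by projector-camera triangulation.

    Assumes a rectified projector-camera pair: Z = f * b / d, where f is the
    camera focal length in pixels, b the projector-to-camera baseline in metres,
    and d the horizontal shift (disparity, in pixels) between where a pattern
    feature was projected and where the camera observes it.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the sensor")
    return focal_length_px * baseline_m / disparity_px


def depth_step_m(focal_length_px: float, baseline_m: float, depth_m: float,
                 disparity_step_px: float = 1.0) -> float:
    """Approximate depth change caused by a disparity error of disparity_step_px.

    From |dZ/dd| = f*b/d**2 = Z**2/(f*b): the error grows with the square of depth.
    """
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_step_px
```

Because depth error grows with the square of distance in this model, structured-light sensors tend to be most accurate at short working distances, which suits mapping a bin positioned close to the sensor.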

Robust hardware and algorithms

These advanced vision systems are able to process large amounts of data by using hardware accelerators such as field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs). This gives them the capability to handle thousands of SKUs on production lines and in order fulfillment applications.

A critical component of advanced VGR systems is the set of algorithms that prevent the robot and its end-of-arm gripping tool from colliding with the sides of the bin or other objects. This interference avoidance software must be exceptionally robust because every pick from the bin requires a different path plan and parts are often intertwined.
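A full interference-avoidance planner checks the entire robot arm along a 3D trajectory, but the core idea can be shown with a much smaller sketch. The code below assumes a box-shaped gripper that descends straight down to the grasp point, and it represents the bin and neighbouring parts as hypothetical axis-aligned rectangles with top heights. It rejects a grasp if the tool, enlarged by a safety margin, would touch a bin wall or come down on a taller neighbour.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rect:
    """Axis-aligned rectangle in the bin's x-y plane, in mm."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def intersects(self, other: "Rect") -> bool:
        """True if the two rectangles overlap with non-zero area."""
        return (self.x_min < other.x_max and other.x_min < self.x_max and
                self.y_min < other.y_max and other.y_min < self.y_max)

    def inside(self, other: "Rect") -> bool:
        """True if this rectangle lies entirely within other."""
        return (self.x_min >= other.x_min and self.y_min >= other.y_min and
                self.x_max <= other.x_max and self.y_max <= other.y_max)


def approach_is_clear(grasp_x: float, grasp_y: float, grasp_z: float,
                      gripper_w: float, gripper_d: float,
                      bin_interior: Rect,
                      neighbours: List[Tuple[Rect, float]],
                      margin: float = 5.0) -> bool:
    """Check a straight, vertical approach of a box-shaped gripper to a grasp point.

    neighbours holds (footprint, top height) for every other part in the bin.
    The gripper footprint is inflated by a safety margin on every side.
    """
    half_w = gripper_w / 2 + margin
    half_d = gripper_d / 2 + margin
    tool = Rect(grasp_x - half_w, grasp_y - half_d, grasp_x + half_w, grasp_y + half_d)
    # 1. The tool must not touch the bin walls at any point of its descent.
    if not tool.inside(bin_interior):
        return False
    # 2. Any neighbouring part under the tool that rises above the grasp height
    #    would be hit on the way down.
    return not any(tool.intersects(box) and top_z > grasp_z for box, top_z in neighbours)
```

A planner would run a check like this for every candidate grasp and discard those that fail, which is why it has to be fast as well as robust: it runs again for each pick.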

Looking ahead

VGR software, including the open-source Robot Operating System (ROS), which is agnostic to robot and sensor hardware, will increasingly make it faster and easier for robot integrators to deliver VGR systems and to integrate new, more powerful sensors as they become available.

At the same time, machine vision and robotics vendors are closely collaborating to make VGR more accessible. For example, machine vision vendors have developed tools that make it easier for engineers to model and optimize sensors for a robotic cell. They are also developing Windows-based VGR systems that are easy for end customers to use.
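Modeling a sensor for a robotic cell starts with basic optics: how much of the cell a camera sees and how finely it samples it. The sketch below uses the standard pinhole approximation; the function names are illustrative, and the numbers in the example are arbitrary rather than a recommendation for any particular camera.

```python
def field_of_view_mm(sensor_size_mm: float, focal_length_mm: float,
                     working_distance_mm: float) -> float:
    """Approximate field of view along one sensor axis.

    Pinhole approximation: FOV = sensor size * working distance / focal length.
    It is reasonable when the working distance is much larger than the focal length.
    """
    return sensor_size_mm * working_distance_mm / focal_length_mm


def mm_per_pixel(fov_mm: float, pixel_count: int) -> float:
    """Size of the scene patch imaged by one pixel along the same axis."""
    return fov_mm / pixel_count


def covers_area(fov_w_mm: float, fov_h_mm: float, area_w_mm: float, area_h_mm: float) -> bool:
    """True if the field of view spans a rectangular area such as a bin opening."""
    return fov_w_mm >= area_w_mm and fov_h_mm >= area_h_mm
```

For example, a 7.2 mm-wide sensor behind a 12 mm lens at a working distance of 1 m sees a strip about 600 mm wide; with 1920 pixels across, each pixel covers about 0.31 mm of the bin.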

Thanks to innovations like these, VGR use is now approaching 50% of robotics in consumer electronics (above the circuit-board level) and other light assembly in Asia. And as random bin picking technology fast becomes a flexible, easy-to-understand and interchangeable commodity, it is within reach of small and medium-sized companies looking to reduce manual intervention, improve safety and quality, and increase productivity. [2f]
