Facial Recognition, Part III: Problems in the UK

The raging debate about UK police use of widespread automated facial recognition covers security, privacy, and legal concerns, while an even more confounding question looms: Does it even work?

We’ve covered different aspects of facial recognition, from why it’s important to the difficulties introduced by biased data sets. We’ve also seen that imaging solutions are popping up everywhere in cities, from the streets to the street lights. Now facial recognition software is being paired with CCTV cameras in public and private places. As the hardware gets cheaper and more powerful, and the algorithms underlying recognition get more sophisticated, it will be easier to implement in more places. What’s at stake?

Other than perhaps China, no country has committed as heavily as the UK to the automated visual monitoring of its populace. Estimates of the number of CCTV cameras in the UK vary between 4 and 6 million, potentially enough for one camera for every ten residents. The country has been using those cameras for facial recognition operations at public events, looking for criminals and troublemakers.

The UK police now hold a database of more than 20 million images for the purpose of facial recognition. Driving the adoption of this technology is general public support for security measures, backed by warnings coming from the UK security community: Andrew Parker, the director general of MI5, said that “UK is facing its most severe terror threat ever.”

The problems begin with the fact that automated facial recognition aimed at presumably innocent people on the street might not be legal.

In 2012, the High Court ruled that it was unlawful for the police to hold all of those 20 million images, as many of them are of innocent people. But the UK government stepped in and said that the police don’t need to delete those images. The police say that the images would be too expensive to delete. Parliament has yet to rule on much of anything regarding the oversight or requirements of facial recognition as it’s being deployed. Civil rights group Liberty argued there was “no legal basis” for the use of the real-time system. Another rights group, Big Brother Watch, also points out that Parliament has never scrutinized the technology’s use in public places.

While both sides argue the legality of these systems, they are still being employed in pilot tests across the country. These tests have revealed another troubling factor: these systems really don’t work. At all. London’s Metropolitan Police used facial recognition at the 2017 Notting Hill carnival, where the system was wrong 98% of the time, falsely telling officers on 102 occasions that it had spotted a suspect.
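To make the arithmetic behind that 98% figure concrete, here is a minimal sketch. The article reports only the 102 false alerts and the percentage; the count of two correct alerts is an assumption, chosen because it is the value consistent with those two figures. The function name `false_alert_share` is hypothetical.

```python
def false_alert_share(false_alerts: int, true_alerts: int) -> float:
    """Fraction of all alerts raised by the system that were wrong."""
    total = false_alerts + true_alerts
    if total == 0:
        raise ValueError("no alerts recorded")
    return false_alerts / total


# 102 false alerts comes from the text above.
# true_alerts=2 is an ASSUMPTION inferred from the reported 98% figure.
share = false_alert_share(false_alerts=102, true_alerts=2)
print(f"{share:.0%} of alerts were false")
```

Note that this measures the share of alerts that were wrong, not the share of all faces scanned that were misidentified. The two numbers can differ enormously in a large crowd.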

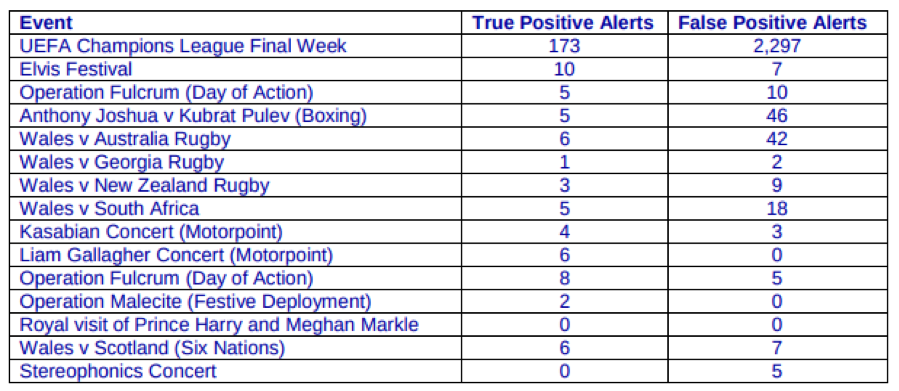

To the west, the South Wales police department was given £2.1m to test facial recognition technology at public events. The results were published in response to a Freedom of Information request:

So that makes this better than the Met’s system, with false positives only 91% of the time! They must be thrilled. On 31 occasions police followed up on the system saying it had spotted people of concern, only to find they had in fact stopped innocent people and the identifications were false. While the South Wales Police have claimed many arrests with the help of the system, they also mistakenly arrested the wrong person. Interestingly, that false arrest was due to the police database’s information being out of date. Having clean and up-to-date data may be even more important than fancy new technology.

In 2015, police in Leicestershire tested facial recognition technology at the Download music festival, which 90,000 people attended. The system did not catch any criminals at the event.

How could this happen in a system actually being employed by professional law enforcement? A spokesperson for the police department blamed the low quality of images in its database. The company behind the tech, NEC, insists this is an end user problem. NEC told ZDNet last year that large watchlists lead to a high number of false positives.
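One way to see why large watchlists and large crowds can produce mostly false alerts is a back-of-the-envelope model. The sketch below is an illustration under stated assumptions, not NEC's or any police force's actual method: it assumes each face is compared independently against every watchlist entry, and every number in the example (crowd size, error rates, watchlist sizes) is hypothetical.

```python
def false_alert_probability(per_comparison_fmr: float, watchlist_size: int) -> float:
    """Chance that one innocent face falsely matches at least one watchlist
    entry, ASSUMING independent comparisons against each entry."""
    return 1.0 - (1.0 - per_comparison_fmr) ** watchlist_size


def expected_false_alert_share(crowd_size: int, listed_present: int, hit_rate: float,
                               per_comparison_fmr: float, watchlist_size: int) -> float:
    """Expected fraction of alerts that point at innocent people."""
    innocent = crowd_size - listed_present
    false_alerts = innocent * false_alert_probability(per_comparison_fmr, watchlist_size)
    true_alerts = listed_present * hit_rate
    total = false_alerts + true_alerts
    return false_alerts / total if total else 0.0


# All values below are HYPOTHETICAL, chosen only to show the trend.
for size in (50, 500, 5000):
    share = expected_false_alert_share(
        crowd_size=100_000, listed_present=20, hit_rate=0.8,
        per_comparison_fmr=1e-6, watchlist_size=size,
    )
    print(f"watchlist of {size}: {share:.0%} of expected alerts are false")
```

Even with a very low error rate per comparison, the share of false alerts in this model climbs as the watchlist grows, because almost everyone in the crowd is innocent and each of them gets many chances to be mismatched.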

CCTV camera technology: a series of macro-scale compromises

CCTV cameras are being used for a steadily growing number of applications. While it’s possible to customize cameras to specific applications, the huge scale of the industry means that, more often than not, configuration decisions are made without considering specific task requirements, such as the video quality needed for reliable person identification.

The constraints of recording introduce further compromises. Digital CCTV surveillance requires processing, storage and data transmission, all of which come at a cost. Most digital CCTV systems by default record video at Common Intermediate Format (CIF) resolution (352×288). However, many can be set to lower resolutions, e.g. Quarter CIF (QCIF) resolution (176×144).
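To put those two resolutions in perspective, a quick pixel count helps. This is a small sketch using only the dimensions quoted above; the helper name `pixel_count` is hypothetical.

```python
# Frame dimensions (width, height) as quoted in the text.
CIF = (352, 288)
QCIF = (176, 144)


def pixel_count(resolution: tuple[int, int]) -> int:
    """Total number of pixels in one frame."""
    width, height = resolution
    return width * height


print(f"CIF:  {pixel_count(CIF):,} pixels per frame")
print(f"QCIF: {pixel_count(QCIF):,} pixels per frame")
print(f"CIF carries {pixel_count(CIF) / pixel_count(QCIF):.0f}x the pixels of QCIF")
```

Halving both dimensions leaves a quarter of the pixels, and a face that occupies only a small part of the frame gets a correspondingly small share of them.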

Owners of surveillance systems will often try to reduce storage requirements by reducing resolution and/or frame rate, or by increasing data compression. There are currently no tested guidelines on video quality and storage available to digital CCTV users to ensure that their systems are set up effectively for the intended tasks. This means that some solutions simply won’t work. One study, “Can we ID from CCTV: image quality in digital CCTV and face identification performance,” from University College London, showed that video quality is absolutely crucial for the success of both automated and people-driven recognition systems. Correct identifications decreased by close to 20% as video bandwidth fell from 92 Kbps to 32 Kbps.
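The storage incentive behind that trade-off is easy to estimate. The sketch below assumes a single camera recording continuously at a constant bitrate, with 1 Kbps taken as 1,000 bits per second and 1 MB as 1,000,000 bytes; real systems with variable bitrates or motion-triggered recording will differ. The function name `storage_per_day_mb` is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def storage_per_day_mb(bitrate_kbps: float) -> float:
    """Megabytes written per day by one camera at a constant bitrate.

    ASSUMES continuous recording, 1 Kbps = 1,000 bit/s, 1 MB = 1,000,000 bytes.
    """
    bits_per_day = bitrate_kbps * 1000 * SECONDS_PER_DAY
    return bits_per_day / 8 / 1_000_000


# The two bandwidths mentioned in the study cited above.
for kbps in (92, 32):
    print(f"{kbps} Kbps -> {storage_per_day_mb(kbps):.1f} MB per camera per day")
```

Under these assumptions, dropping from 92 Kbps to 32 Kbps cuts storage by roughly two thirds, which is the kind of saving that tempts operators into settings that undermine identification.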

Poor video quality is often made worse by the fact that CCTV systems are poorly set up. Lighting, focus, and distance are often not accounted for. The result is potentially unusable video for key security observation tasks such as monitoring, detection, and recognition.

Finding solutions, balance, and clear results

Critics claim that these systems are wasting millions of pounds and violating civil rights. They may not be wrong. But with the pressures that police forces in the UK face, they seem to be committed to keeping the systems and implementing more. While the technology will certainly improve, and the long view may be the correct one, there are risks to ignoring critics today. Perhaps the most significant is a loss of legitimacy if the police and government are seen as too controlling and oppressive. You can be technically right, but still miss a more relevant truth.

The South Wales Police, in its privacy assessment of the technology, says that a “significant advantage” of its facial recognition system is that no “co-operation” is required from a person. This is more than a little ironic, considering the British police force has operated under a unique philosophy of “policing by consent” since at least the early 1800s, where authority comes almost exclusively from public co-operation with the police. Total surveillance may go against the police force’s core values.

It also may make them a laughingstock. In 2007 the city of London ran a series of posters around the city promoting the value of their (then relatively new) CCTV network. The posters’ disembodied eyes and the slogan ‘Secure Beneath the Watchful Eyes’ drew direct comparisons to George Orwell’s novel 1984 and its totalitarian policing. The short-lived poster might actually have been based on good science. Melissa Bateson, Daniel Nettle, and Gilbert Roberts published research at Newcastle University that suggested that pictures of eyes increased honesty in people observed in a normal work environment: A picture of a pair of eyes near a coffee machine honesty box tripled the amount of money collected. The idea was that eyes remind us that we could be being watched, and, either consciously or unconsciously, we feel the need to be more honest as a result.

But whether it worked or not, it drew too much criticism to be allowed to continue. Eventually these systems may be good enough, and by then hopefully the oversight and political framework will be ready. Hopefully the UK government will proceed in a way that gives facial recognition a chance to help.

There’s reason to hope. The UK’s regulators for biometric data and surveillance cameras have both called for stronger rules around the use of automated facial recognition technology. In his 2017 annual report, Tony Porter, the Surveillance Camera Commissioner, said automatic facial recognition is “fascinating” but can be used for “intrusive capabilities” and have “crass” applications:

“The public will be more amenable to surveillance when there is justification, legitimacy and proportionality to its intent.”
