Future Tech Review #3: What are we feeding our AI systems and what’s the impact?

Artificial intelligence is growing in popularity and use. Its sophistication can be attributed in large part to the brains behind it and the information we feed it.

We’ve done it. We’ve really done it. We’ve made our most valuable, intangible asset transferable. We’ve given machines the power to think and act like humans.

Artificial intelligence (AI) is a modern-day fascination. And, somewhat surprisingly, according to Elon Musk, AI investor and CEO of Tesla and SpaceX, it could be a modern-day disaster. In an interview with students at the Massachusetts Institute of Technology (MIT) during the AeroAstro Centennial Symposium, Musk cautioned against its widespread use.

“I think we should be very careful about artificial intelligence. If I had to guess at what our biggest existential threat is, it’s probably that. So we need to be very careful,” said Musk.

Yes, giving “life” to robots could soon turn society into a real-life Matrix situation, but others suggest that what we feed AI may be the most concerning issue of all. Not only does AI require a vast amount of data to learn about the world, it also requires a diverse range of information. And, most importantly, that information must be objective.

Racist Robots

Bias – a quintessential human quality – is not something you’d imagine a robot having. In fact, robots are often used for their express ability to remain impartial. However, as the old adage goes – monkey see, monkey do. Thanks to new machine learning algorithms and techniques, programs such as Google Translate are getting much better at interpreting and mimicking the nuances of human language, and as a result they have started picking up on the subtle racial and gender biases found in the patterns of human language use. Are these robots racist? Joanna Bryson, a computer scientist at the University of Bath, pushed back on that framing: “A lot of people are saying this is showing that AI is prejudiced. No. This is showing we’re prejudiced and that AI is learning it.”

“The real safety question, if you want to call it that, is that if we give these systems biased data, they will be biased.” – John Giannandrea, SVP of Engineering and Chief of AI at Google
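To make Giannandrea’s point concrete, here is a deliberately simplified sketch. It is not how Google Translate or any production system works; it is a toy “model” that picks a pronoun for an occupation by counting which pronoun it saw most often alongside that occupation in its training sentences. The corpus and the function names (`learn_pronoun_counts`, `fill_pronoun`) are hypothetical, and the skew in the corpus is baked in on purpose.

```python
from collections import Counter

# Hypothetical toy corpus, deliberately skewed. It stands in for
# "patterns of human language use" and is not real data.
CORPUS = [
    "he is a doctor", "he is a doctor", "she is a doctor",
    "she is a nurse", "she is a nurse", "he is a nurse",
    "he is an engineer", "he is an engineer",
]

def learn_pronoun_counts(corpus):
    """Count how often each pronoun appears with each occupation."""
    counts = {}
    for sentence in corpus:
        words = sentence.split()
        pronoun, occupation = words[0], words[-1]
        counts.setdefault(occupation, Counter())[pronoun] += 1
    return counts

def fill_pronoun(counts, occupation):
    """Return the pronoun seen most often with this occupation in training."""
    return counts[occupation].most_common(1)[0][0]

counts = learn_pronoun_counts(CORPUS)
for job in ("doctor", "nurse", "engineer"):
    print(job, "->", fill_pronoun(counts, job))
```

Nothing in the code mentions gender or occupations as categories to favor; the lopsided answers (“he” for doctor and engineer, “she” for nurse) come entirely from the lopsided input. Feed the same counting logic a balanced corpus and there is no skew for it to reproduce.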

Biased Algorithms Are Everywhere, and No One Seems to Care

Disappointingly, though not surprisingly, neither the tech powerhouses behind AI nor government officials seem to care a whole lot about the potential negative effects of the prejudiced data we’re passing on to our robotic counterparts. As MIT Technology Review reporter Will Knight notes, there is very little regulation around machine learning applications and their consequences. “Financial and technology companies use all sorts of mathematical models and aren’t transparent about how they operate,” says Knight. Most of the time, these businesses are more interested in the bottom line than in the risk of biased algorithms. In July, a group of researchers, along with the American Civil Liberties Union, launched the AI Now Institute to examine the social implications of AI. With little support coming from the top, it’s groups like these that will have to step up and step in to ensure AI innovators are held to the same standards as other industry leaders.

Artificial Intelligence’s Fair Use Crisis

Beyond black-and-white decision-making, AI may soon make its way into the creative world. In the Columbia Journal of Law & the Arts, Benjamin L.W. Sobel dives deep into this very real possibility. By ingesting different art forms, these programs could, for instance, “write a natural prose, compose music, or generate movies” – all while smoking a pipe and wearing a posh ascot. But Sobel highlights a notable problem with machine learning from copyrighted material: the current U.S. fair use doctrine leads to one of two outcomes. Either it prohibits further innovation in this realm because of copyright law, or it allows that innovation while diverting rightful earnings from the people who actually made the art in the first place. The open question is whether machine learning applications qualify as fair use at all. Either way, the lines will only get blurrier as AI crosses over into new industries.

Some Advice for Journalists Writing About Artificial Intelligence

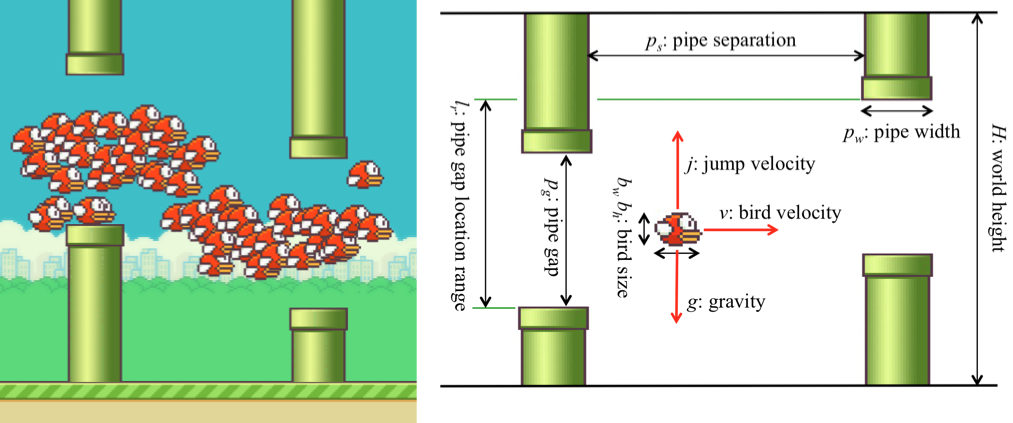

It sometimes takes an engineer to separate hype from reality. Julian Togelius, an AI researcher and Associate Professor at NYU’s Tandon School of Engineering, has some brilliant recommendations on how to write (and read) about artificial intelligence. His tips range from the always-wise “Don’t believe the hype” to advice on how to even refer to AI, and why and how you should read Alan Turing’s 1950 paper “Computing Machinery and Intelligence.” Togelius is also co-director of the NYU Game Innovation Lab, where you can literally play with his research.

So whether you’re in the business of developing technology for AI, an enthusiastic supporter of AI, or someone with a passing interest in AI, remember that, like humans, AI is a product of its environment, to a degree. It’s the old nature vs. nurture debate. Neither one alone is the answer; both will have an impact and interact in surprising ways. As the technology advances, the relationship between what AI innovators create and what their creations actually do is going to be weirder, more unexpected, and more genuine than anything we’ve seen before.
