The Four Basics of Machine Vision Applications

“All I need to do is…” – as a machine vision applications engineer, the sound of those words puts me in “Danger Will Robinson!” mode.

If a prospective customer says that, there’s a chance he hasn’t thought through the entire application; this is especially true if the customer is new to machine vision. “All I need to do is make a couple of measurements on a shiny metal part and kick the bad parts off the assembly line.” Can the shape and orientation of the metal part cause reflections or glare? “All I need to do is make sure the already-filled bottle of soda doesn’t contain any foreign material.” Will the bottle always be oriented so that its contents can be seen by the camera, or can it rotate such that its label gets in the way? “All I need to do is read a barcode.” And do what with the code?

There are four basic steps in a machine vision application. Each one must be carefully considered to avoid disappointment, frustration and heartbreak for the customer and nasty surprises for the application developer.

- Acquire an image

- Extract information from the image

- Analyze the information

- Communicate the results to the outside world

1. Acquire an image

We urge customers to spend the time and money getting the right camera, lighting and lens to acquire the best image possible of real parts under real working conditions. Image preprocessing can sometimes be used to turn a not-great image into a good-enough image, but it’s much more difficult to turn a terrible image into a usable image. As the saying goes, you can’t make a silk purse out of a sow’s ear. But why you’d want to is beyond me.

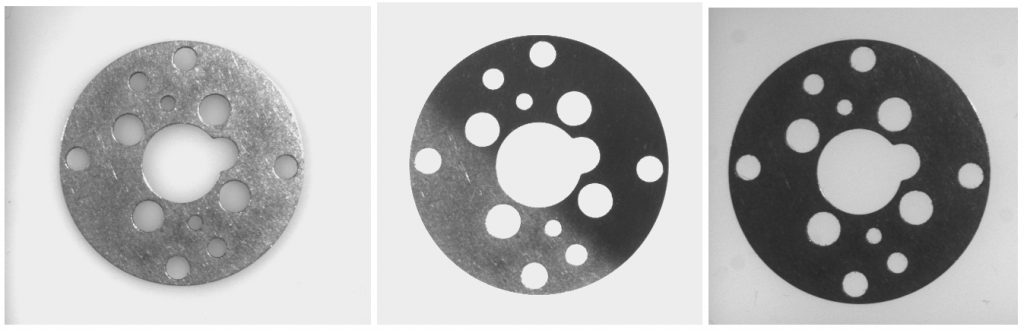

I’d like to emphasize a phrase in the previous paragraph: “Real parts under real working conditions.” A colleague recently spent several weeks developing an application to distinguish among four similar stamped metal parts for a customer outside the United States. (Remote application development can be a challenge!) The differences among the parts were small but detectable in the 100+ images the customer had acquired using the lighting and lens he had configured himself and sent to my colleague. (The customer was knowledgeable about machine vision, having formerly used a certain yellow product.) A few weeks later, the customer sent a batch of images in which all of the parts were slightly blurry. When my colleague asked why this was, the customer explained that the first images were taken while the parts were stationary, and the new ones while the parts were moving down the conveyor belt, as they would be in the final deployment. The blurriness made distinguishing the small differences impossible. (The customer is investigating how to remove the blurriness, most likely with a shorter exposure time.)
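To put rough numbers on that kind of blur, here is a minimal sketch of the usual back-of-the-envelope estimate: the part moves (belt speed × exposure time) while the shutter is open, and dividing by the size of one pixel on the part gives the smear in pixels. The function names and the example figures are hypothetical, not taken from the customer's setup.

```python
def motion_blur_pixels(belt_speed_mm_s: float, exposure_s: float, mm_per_pixel: float) -> float:
    """Distance the part travels during one exposure, expressed in image pixels."""
    return belt_speed_mm_s * exposure_s / mm_per_pixel


def max_exposure_s(belt_speed_mm_s: float, mm_per_pixel: float, max_blur_px: float = 1.0) -> float:
    """Longest exposure that keeps the smear at or below max_blur_px pixels."""
    return max_blur_px * mm_per_pixel / belt_speed_mm_s


# Hypothetical setup: belt at 500 mm/s, 5 ms exposure, each pixel covers 0.1 mm.
blur_px = motion_blur_pixels(belt_speed_mm_s=500.0, exposure_s=0.005, mm_per_pixel=0.1)
limit_s = max_exposure_s(belt_speed_mm_s=500.0, mm_per_pixel=0.1, max_blur_px=1.0)

print(f"blur at 5 ms exposure: about {blur_px:.0f} px")
print(f"exposure for 1 px of blur or less: about {limit_s * 1e6:.0f} microseconds")
```

A shorter exposure collects less light, so in practice this calculation usually feeds straight back into the lighting choice.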

2. Extract information from the image

“I can see the feature/difference/flaw, why can’t your software?” is a not-uncommon question.

We humans have had tens of thousands of years to hone our pattern-matching skills and develop our neural networks. Presented with an image, even one we’ve never seen before, and told to perform a visual task (“Find the widget that’s different from all the other widgets”), we’re good at ignoring the background noise and zeroing in on what’s important. A computer is, by comparison, stupid. (Although I’m suspicious of my smartphone – I think it’s been taking my car for joyrides at night while I’m sleeping.)

A machine vision system must be programmed to explicitly measure the size of an object, locate a feature with a high degree of reliability, or determine whether a color is what it should be. A typical machine vision system is not configured to handle unexpected changes to the scene – deterioration of focus, change of scale of the objects to be measured, a loading dock bay door being opened and flooding the setup with outside light. (Yes, that was a real case!)

Back to my colleague and the stamped metal parts… Even before the blurriness problem, the customer had sent a second batch of images of what he considered the same parts. But in fact, they had features that were not present in the first batch of images, and these features interfered with the software tools my colleague had successfully applied to the original images. This made it necessary to modify the software tools to ignore those features. The customer didn’t understand why these new images had caused a problem since the new features had “nothing to do” with the features that were being analyzed to distinguish the parts.

There’s a lot of excitement these days about the coming wave of artificial intelligence – this is at least the 3rd time since I graduated college more years ago than I care to think about. At VISION 2016 in Stuttgart, a few companies were showcasing their “deep learning” software. It was impressive, but our customers are trying to solve problems in industrial environments, usually within a few hundred milliseconds, for as little money as possible. It’s not clear yet how easily or economically deep learning will port to the factory floor.

3. Analyze the information

This step is sometimes optional, for example when the information extracted from the image in Step 2 just has to be logged to a file. But more often, the information has to be analyzed to make a pass/fail decision. In most applications this is quantifiable and straightforward: If the part doesn’t meet the dimensional criteria, or if the label is crooked, or if there’s a mouse corpse in the bottle, it’s a reject. Other times, the pass/fail criteria are fuzzier. What’s the largest scratch allowed on a smartphone case before the case is a reject? Is a 0.05 mm straight-line scratch worse than a 0.05 mm spiral scratch? Customers sometimes have difficulty providing quantitative answers. Two humans who have performed the same visual inspection for years may disagree on the same part, one accepting it and the other rejecting it, because their somewhat subjective ideas of good and bad may not be quite the same.
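For the quantifiable case, the analysis step can be as plain as comparing each measurement against a tolerance band. The sketch below is only an illustration; the `Tolerance` class, the `inspect` function, the feature names, and the limits are all hypothetical. A measurement that Step 2 failed to produce is treated as a failure rather than silently passed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tolerance:
    nominal: float
    minus: float  # allowed deviation below nominal
    plus: float   # allowed deviation above nominal

    def accepts(self, measured: float) -> bool:
        return self.nominal - self.minus <= measured <= self.nominal + self.plus


def inspect(measurements: dict, spec: dict) -> tuple:
    """Return (passed, failed_features) for one part."""
    failed = [
        name
        for name, tol in spec.items()
        if name not in measurements or not tol.accepts(measurements[name])
    ]
    return (not failed, failed)


# Hypothetical spec and measurements for a stamped part.
spec = {
    "width_mm": Tolerance(nominal=25.0, minus=0.1, plus=0.1),
    "hole_diameter_mm": Tolerance(nominal=5.0, minus=0.05, plus=0.05),
}
passed, failed = inspect({"width_mm": 25.04, "hole_diameter_mm": 5.08}, spec)
print("PASS" if passed else f"REJECT: {failed}")
```

The fuzzier criteria (how bad is a spiral scratch?) are exactly the ones that resist being written down as a table like `spec`, which is why they need to be settled with the customer first.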

Years ago I was sent a box of 40 metal cylinders to determine whether our software could distinguish the bad from the good. The cylinders bore scratches and pits of various sizes but were not marked to indicate which were good and which were bad. I called the customer to ask him which were which. “Oh, I forgot to label them,” he answered. “Can’t your software figure it out?”

4. Communicate the results to the outside world

By “outside world”, I mean beyond the analysis part of the application. Our products generally don’t work in isolation; there is usually other hardware and software with which they must interact. We provide interfaces to a lot of this – TCP/IP and serial communication, PLC protocols, etc. – but we can’t vouch for the reliability of another company’s product. I’m not saying it’s never our fault when there’s a communication problem – we’re good, but we are not perfect – but there have been more than a few occasions when, after beating our heads against the wall trying to figure out why our software can’t pass data to a PLC in a factory in The Middle of Nowhere, North Dakota – where we can’t visit, and can’t access remotely – it’s turned out that the two products weren’t configured properly to communicate with each other.

A critical metric for the vast majority of machine vision applications is processing time, which determines throughput: the number of parts that can be acquired and analyzed per second or per minute. For many applications, the selling point for machine vision is not its ability to perform a task that a human can’t, but rather to perform it many times a second for 24 hours without its mind wandering off into… Oooh, look, shiny object!

Where was I?
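Processing time, that's where. A quick way to sanity-check it is to turn the required line rate into a per-part time budget and see whether the four steps fit inside it. This is a minimal sketch with made-up numbers, and it assumes the steps run strictly one after another for each part (no overlapping acquisition of the next part with processing of the current one); the function names are hypothetical.

```python
def cycle_budget_ms(parts_per_minute: float) -> float:
    """Time available per part, in milliseconds, at the required line rate."""
    return 60_000.0 / parts_per_minute


def headroom_ms(parts_per_minute: float, step_times_ms: dict) -> float:
    """Budget left over after all steps; a negative value means the line outruns the system."""
    return cycle_budget_ms(parts_per_minute) - sum(step_times_ms.values())


# Hypothetical timings for the four steps, in milliseconds.
steps = {"acquire": 15, "extract": 40, "analyze": 5, "communicate": 10}

budget = cycle_budget_ms(600)
spare = headroom_ms(600, steps)
print(f"budget per part: {budget:.0f} ms, headroom: {spare:.0f} ms")
```

Timings measured on real parts under real working conditions are the only ones worth plugging in here; worst-case values matter more than averages.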

As Alexander Pope wrote, “A little learning is a dangerous thing.” And developing a machine vision solution presents challenges that require learning from both sides of the equation – from the application developer and from the customer side. A good system result can only occur when both parties have a good understanding of the challenge of the problem and the desired result and that, my friend, takes a certain amount of dialogue. At the end of the day, my goal is to ensure an understanding is in place for the delivery and continued operation of a stable, reliable system.
