Dynamic X-ray Imaging: A Second Look, a New Diagnosis

As the value of medical X-rays and 3D imaging evolves, the value of image intensifiers gets a bit fuzzy.

Bodies are made to move. The invention of x-ray imaging in 1895 was a huge breakthrough for many industries, allowing them to see what was invisible. But in the medical field, it’s been dynamic imaging that has really shown doctors the important stuff. Seeing what happens, and how things move, is vital to knowing how our bodies work (and sometimes don’t).

Dynamic real-time imaging is the best way to understand those movements. Both medical care and research benefitted greatly, in areas including heart imaging, the functions of the gastrointestinal tract and arthritis. Usefulness and usability ensured that the technology spread.

There were some…drawbacks. Early dynamic imaging relied on scintillating screens, and the image was so faint that physicians could only examine x-ray images in the dark. They would need to remain in a darkened room for 15 minutes until their eyes adjusted and the x-ray image could be viewed properly on the scintillating screen. If the physician needed to leave the darkened room for any reason, the process would have to start all over again.

These factors delayed and protracted medical examinations, which was unpleasant for both patients and doctors. Even more unpleasant was the significant radiation exposure for everyone involved. Still, for many years, these scintillating screens were widely used, well understood (excluding perhaps the long-term effects of radiation exposure), and as far as most people were concerned, “good enough.”

Workflow Drives Adoption: Enter the Image Intensifier

A technology is only “good enough” until it suddenly isn’t. The introduction of image intensifiers in the early 1950s meant that scintillating screens were quickly doomed. With hundreds of times the brightness of previous x-ray images and a safer, easier workflow, it was no contest.

The image intensifier is a simple enough device if you are familiar with physics. For everyone else, the very visual analogy is that of the ‘CRT, backwards.’ At its simplest, the image intensifier is a vacuum tube with a scintillator input window, a photocathode, an electrostatic lens, a luminescent screen and an output window. It receives X-rays and converts them to useful, visible light.

Today, image capture for these devices is usually performed using CCD image sensors. Their output can then be presented in real-time, on-screen for analysis. Compared to those glowing cancer screens, image intensifiers were much easier to use.

Accepting Compromises

Because there was no other viable option, medical professionals generally became accustomed to the disadvantages of image intensifiers. Sure, they were delicate, being sensitive to electric and magnetic fields. Radio frequencies also interfered with their operation. The scintillators have a short lifetime, depending on how they are used. Contrast starts to fall off sharply if the device is exposed to a lot of x-rays. This can happen when the patient moves out of the way of the x-ray beam, exposing the image intensifier to the full brunt of the x-rays and saturating the device. As we mentioned, bodies move. As they do, the performance of image intensifiers degrades.

Blooming artifacts also make the devices harder to use, and it takes time to adjust the exposure to get useful dynamic range.

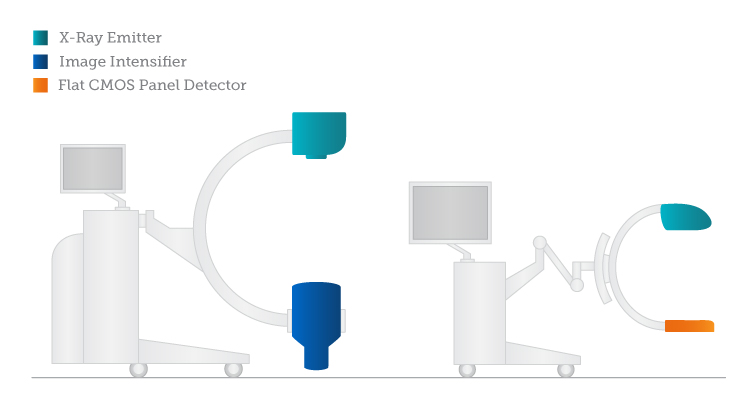

While image intensifiers are delicate, hospitals and emergency response scenes are not typically delicate places. Beyond their delicacy and sensitivity to the environment, image intensifiers also require serious compromises in terms of physical size: each is the shape and size of a small water barrel or at least a water bucket (hopefully you aren’t imaging a hip fracture).

New Reasons to Reject Compromise

While it was much better than what came before, no technology is perfect—or permanent. Manufacturers have continually revised designs to minimize flaws, and today, some sixty years later, image intensifiers remain a proven, reliable, and relatively cheap technology. All of their problems are known and workarounds exist for most of them. But there are limits. While image intensifiers remain after six decades, much of medical practice has moved on.

One key example has been the rise of minimally invasive surgery. Many orthopedic surgeries, such as knee and hip replacements, can now be performed via very small incisions. The challenge is to be able to see what you are doing. The answer is consistent x-ray imaging over the duration of the surgery. This makes accurate imaging at low doses more necessary. The x-ray imager may need to be on for the 30-40 minutes of the surgery to ensure success. Some unusual cases could call for hours, with the procedure being interrupted to allow the equipment to cool down. Neither patients nor doctors want to sign up for exposure that is even the tiniest bit above the absolute minimum, and image intensifiers have their limits too.

As much as it’s a health concern, it’s also a professional one: in some countries, surgeons must retire after reaching their lifetime limit of radiation. The more experienced a surgeon is, the more likely she or he is to be retired.

A False Hope for Digital Sensors

The first attempt to replace image intensifiers once again adapted a television technology. Researchers found that the same TFT panel technology that gave people thin TVs could be used to detect x-rays. The compact size of these panels was a big advantage in mobile C-arms, x-ray imaging devices used for emergency response. On-site applications greatly favor more compact digital devices.

While TFT-based Flat Panel Detectors (FPDs) were better than image intensifiers in many ways, they underperformed at low dose. There, they were actually much worse. While companies have successfully added ultrasound, powerful x-ray tubes and software to deliver improvements, FPDs still cannot perform at low doses.

Spoiler Alert: 3D Imaging

When it comes to the future of x-ray imaging, the true nail in the coffin for image intensifiers and FPDs is, and continues to be, patient dose. Nowhere is this more apparent than in 3D imaging, or computed tomography. Simply put, with older technologies the x-ray dose required for the multiple images that add up to a strong tomographic image is too high. And while low-dose performance is a bit niche today, the development path forward is clear: one day every X-ray examination will be considered in 3D first.

Even if dose requirements for image intensifiers could be tolerated, the round images that image intensifiers produce are very inefficient for 3D imaging. Because of strong geometrical distortions, other image artifacts and uneven resolution, not all of the image area is suitable for 3D reconstruction. The twentieth-century technology of image intensifiers has turned out to be unsuitable for twenty-first-century performance requirements.

For Yet Another Industry, CMOS Looks Really Good

Enter CMOS sensors, the heroes of so many other industries, and present at the heart of most electronic devices, from wristwatches and automobiles to mobile phones and computers. CMOS offers numerous advantages. CMOS is very good at low-dose performance, with virtually no added noise. This comes from better detective quantum efficiency than either image intensifiers or FPDs. CMOS imagers can deliver equivalent image quality at lower doses or better image quality at standard doses, allowing medical anomalies to be diagnosed at an earlier stage and significantly increasing the probability of early intervention, patient recovery and reduced treatment costs.

Environmentally Friendly: Compared to FPDs, CMOS is very low power. It also doesn’t require active cooling, which is a significant advantage when creating a sterile field where everything needs to be wrapped and protected in multiple layers of plastic. In contrast, a wrapped dynamic FPD needs to be liquid-cooled to operate properly, with a separate (expensive and bulky) heat extractor.

Rad- and Time-Hardness: Similar to the organic structures of DNA, all image sensor structures suffer damage from X-ray photons, which results in increased noise and reduced sensitivity. This becomes increasingly apparent over the lifetime of the detector. CMOS offers longevity more than an order of magnitude better than that of image intensifiers. In the long run, the initially more expensive CMOS x-ray detector actually saves the hospital’s X-ray department money.

Waiting to Catch Up

Transitions like this are difficult, as no technology transition is “free.” CMOS requires adjustment and training. Specialized software is needed to create 3D images. The medical industry also remains very pragmatic. It may not matter how much better a given technology performs; what matters is how it performs for the price.

Still, the way forward seems clear. For decades, hospital personnel have been finding ways to ditch the old elements of imaging: film, chemicals, image plates, and bulky image intensifiers. Today they use CT, PET, SPECT, MRI and ultrasound, technologies that are increasingly modern, digital, and integrated into a larger medical center data network. More advanced diagnostic and intra-operative imaging leads to better outcomes. Low-dose and 3D imaging represent the future, and high-performance x-ray systems will definitely be needed to meet those demands.

With ever longer life expectancies and a more demanding (and educated) population, the opportunities for improved diagnosis, increased patient comfort, lower dose and 3D imaging will make it very difficult for image intensifiers to remain the status quo.
