Night-sight: Competing technologies for the vision systems in autonomous vehicles

Infrared, lidar, and time-of-flight imaging offer hope for solving one of the industry’s toughest challenges

It’s nighttime. You’re driving. A Chuck Berry song enters your mind: New Jersey Turnpike in the wee, wee hours / Rolling slowly because of drizzling showers.

But are you rolling slowly enough?

At night, the average vehicle’s high beams illuminate about 400 feet of road, and far less in inclement weather. If you’re traveling at a conservative 55 mph (about 80 feet per second), it will take you about 170 feet to stop once you apply your brakes. But the average driver will travel 120 feet before the brakes are even applied. That adds up to roughly 290 feet, which leaves little margin for error, and if you’re driving less conservatively, you’d better hope that deer moves before you reach it.
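The arithmetic behind that margin can be sketched in a few lines. This is a simplified model under stated assumptions: a fixed reaction time of 1.5 seconds and a constant braking deceleration of 19 ft/s² (about 0.6 g). Both values are illustrative and were chosen so that 55 mph roughly reproduces the 120-foot and 170-foot figures above. They are not measured data.

```python
MPH_TO_FPS = 5280 / 3600  # feet per second in one mile per hour

def stopping_distance_ft(speed_mph, reaction_time_s=1.5, decel_fps2=19.0):
    """Return (reaction, braking, total) distances in feet.

    reaction_time_s and decel_fps2 are illustrative assumptions, picked so that
    55 mph gives roughly the article's 120 ft reaction and 170 ft braking figures.
    """
    v = speed_mph * MPH_TO_FPS            # speed in ft/s
    reaction = v * reaction_time_s        # distance covered before the brakes engage
    braking = v ** 2 / (2 * decel_fps2)   # constant-deceleration stop: v^2 / (2a)
    return reaction, braking, reaction + braking

HIGH_BEAM_REACH_FT = 400  # the article's figure for typical high beams

for mph in (55, 65, 70):
    reaction, braking, total = stopping_distance_ft(mph)
    margin = HIGH_BEAM_REACH_FT - total
    print(f"{mph} mph: {reaction:.0f} ft + {braking:.0f} ft = {total:.0f} ft "
          f"(margin {margin:+.0f} ft)")
```

At 55 mph the total comes to about 292 feet, which leaves roughly 108 feet to spare. Braking distance grows with the square of speed, so at 70 mph the total already exceeds the reach of the high beams.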

But perhaps you’re driving a car of more recent vintage. A nice one. Something German, maybe. It has night vision, so you think you’re safe. Are you?

Active or passive?

Night vision made its first appearance in regular production automobiles on the 2000 Cadillac DeVille. The technology was a relatively primitive form of thermal infrared imaging produced by Raytheon Technologies, the century-old, Massachusetts-based defense contractor.

“It wasn’t true night vision, which amplifies available light,” wrote Nick Stockton for SPIE, a non-profit dedicated to advancing the scientific research and engineering applications of optics and photonics. “Using a grill-mounted, 3-inch passive sensor, Cadillac’s system picked up thermal imagery from the oncoming road, then transmitted it to a grayscale display in the car’s center dashboard. Cooler objects were darker, while warm stuff, like bodies, showed bright white.”

The system General Motors employed was what’s known as passive infrared. Passive systems have the advantage of a range of up to 1,000 feet, but they work poorly in warm weather and show drivers a grainy, low-resolution image.
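One plausible physical reason for the warm-weather weakness comes from two textbook blackbody laws. Wien’s displacement law puts the peak emission of a body near 37 °C in the long-wave infrared. The Stefan–Boltzmann law shows that the *difference* in emitted power between a person and the background shrinks as the air warms. The sketch below is a simplification that assumes an idealized 310 K body and treats both target and background as ideal emitters. Real skin, clothing, and road surfaces differ.

```python
# Physical constants
WIEN_B_UM_K = 2897.771955   # Wien's displacement constant, micrometre-kelvin
SIGMA = 5.670374419e-8      # Stefan-Boltzmann constant, W m^-2 K^-4

def peak_wavelength_um(temp_k):
    """Wavelength (micrometres) at which a blackbody at temp_k emits most strongly."""
    return WIEN_B_UM_K / temp_k

def radiant_contrast(target_k, background_k, emissivity=1.0):
    """Difference in emitted power per unit area (W/m^2) between target and
    background, assuming both share the same emissivity (idealization)."""
    return emissivity * SIGMA * (target_k ** 4 - background_k ** 4)

BODY_K = 310.0  # about 37 degrees C; an idealization, since skin and clothing run cooler

print(f"peak emission of a warm body: {peak_wavelength_um(BODY_K):.1f} um")
for ambient_c in (10, 30):
    ambient_k = ambient_c + 273.15
    print(f"{ambient_c} C ambient: contrast {radiant_contrast(BODY_K, ambient_k):.0f} W/m^2")
```

Under these assumptions, peak emission lands around 9.3 micrometres. The person-versus-background contrast on a 30 °C night is more than three times smaller than on a 10 °C night, which is consistent with the grainier picture passive sensors deliver in warm weather.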

In 2002, Toyota entered the field with an active infrared system it packaged with its high-end Land Cruiser Cygnus and Lexus LX470. Its “Night View” combined a near-infrared projector beam with a charge-coupled device camera that captured reflected radiation. An onboard computer processed the signal and projected a black-and-white image onto the windshield.

Active infrared gives drivers higher-resolution images than passive systems and is better at picking up inanimate objects such as road debris or stalled vehicles. However, it doesn’t work as well in fog or rain, and its maximum range is 650 feet.

GM dropped the Raytheon night vision system in 2004. It seems that even for Cadillac buyers, the $3,000 price tag was too steep for a bulky sensor with limited precision. BMW added a passive system to its luxury 7-Series line in 2005, and its German competitors, Audi and Mercedes-Benz, entered the game soon after. Even so, night vision technology showed no sign of spreading to mainstream motoring.

Interestingly, Volvo, the automotive manufacturer most closely associated with safe driving, watched from the sidelines. After introducing a version of night vision on its vaunted Safety Concept Car in 2001, the carmaker focused on improvements to more traditional lighting and on radar-based braking for what it calls “City Safety.”

Meanwhile, Honda/Acura, which introduced a system around the same time as the German manufacturers, had stepped back, and Night View had quietly disappeared from the Lexus list of buyer options in 2013.

It seemed that night vision wasn’t ready to take its place alongside safety features like seat belts, air bags, and “crush zones” built into vehicle frames. Manufacturers put more development dollars into surviving crashes than into avoiding them.

“Night vision cameras—like all pieces of hardware in automated driving—have their benefits as well as their drawbacks,” said Ellen Carey, a spokeswoman for Audi. “This specific technology will need to overcome challenges of cost, field of view, and increased durability to meet the stringent criteria for automation-grade sensors.”

“Auto manufacturers are tough customers,” said John Lester Miller, the CEO and founder of Cascade Electro Optics in Oregon. “They want everything cheap, reliable, and durable.”

It’s all about space

So it appears that night vision might have gone the route of the disc brake, which Triumph introduced to automotive production lines in 1956 but which didn’t reach dominant lines like Chevrolet until the 1970s. That might have happened, at least, had five key US states (California, Nevada, Michigan, Virginia, and Florida) and the District of Columbia not opened their public roads to autonomous-vehicle testing by 2015. Since then, companies ranging from Apple to Zoox have clocked an estimated two million autonomous miles in California alone. Each of them has faced the same elemental challenge: What else is on the road, and where?

Spatial awareness is everything to the autonomous car, truck, or bus.

Consider the monumental setback suffered by one of the key players in the autonomous vehicle game, Uber, on March 18, 2018. Just before 10 p.m. that night in Tempe, Arizona, an autonomous 2017 Volvo XC90 owned by Uber struck and fatally injured a 49-year-old pedestrian named Elaine Herzberg, who was walking her bicycle across a four-lane road. Inside the Volvo sat Uber safety operator Rafaela Vasquez, who, investigators later concluded, had been watching a TV show on her cellphone. Vasquez apparently believed she had no reason to watch the road, because the Volvo was equipped with seven optical cameras, ten radar sensors, and lidar, the imaging technology that uses near-infrared light.

Reacting to a dashcam recording of the accident that was retrieved from the Volvo by Tempe police, Michael Ramsey, a research director with Gartner who specializes in self-driving cars, said: “The video clearly shows a complete failure of the system to recognize an obviously seen person who is visible for quite some distance in the frame. Uber has some serious explaining to do about why this person wasn’t seen and why the system didn’t engage.”

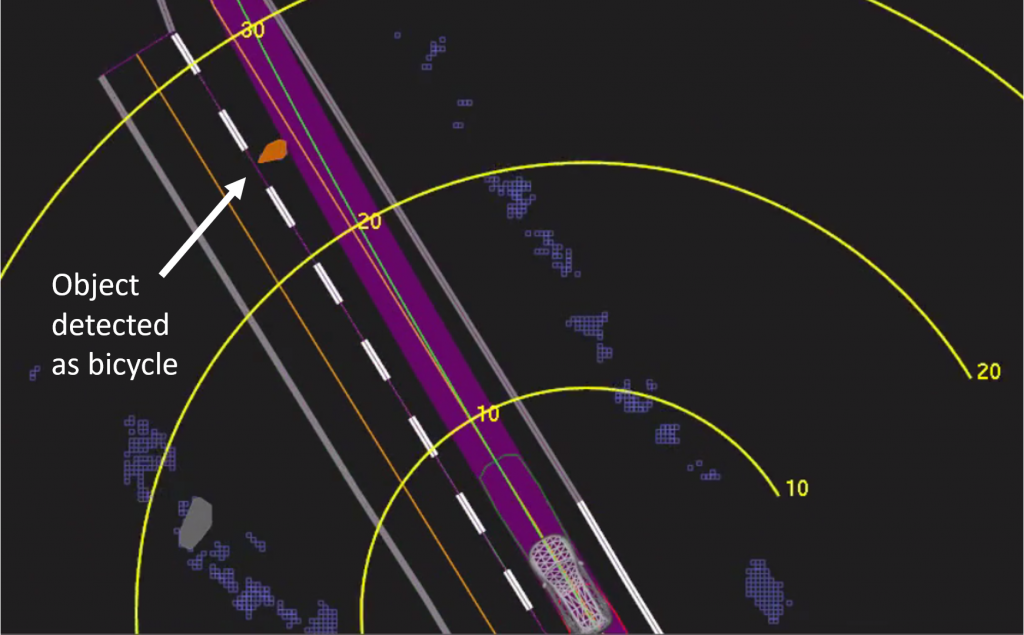

In its preliminary incident report, the NTSB reported that six seconds prior to impact the Volvo was traveling at 43 mph and its onboard system identified Herzberg and her bicycle as an “unknown object.” A moment later, the system identified Herzberg and her bike as a vehicle, and then as just a bicycle. At 1.3 seconds before impact, the system flagged the need for emergency braking. The Volvo hit Herzberg at 39 mph.
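A rough calculation shows why those seconds mattered. The sketch below makes several simplifying assumptions. It assumes the car held a constant 43 mph over the whole window, although it was at 39 mph at impact. It ignores system and brake-actuation latency, and it treats braking as a constant deceleration. Under those assumptions, it estimates how far away Herzberg was at first detection and at the braking flag, and how hard the car would have had to brake to stop in time.

```python
MPH_TO_FPS = 5280 / 3600   # feet per second in one mile per hour
G_FPS2 = 32.174            # standard gravity in ft/s^2

def distance_ft(speed_mph, seconds):
    """Distance covered at constant speed over the given time."""
    return speed_mph * MPH_TO_FPS * seconds

def required_decel_g(speed_mph, distance):
    """Constant deceleration (in g) needed to stop within `distance` feet: v^2 / (2d)."""
    v = speed_mph * MPH_TO_FPS
    return v ** 2 / (2 * distance) / G_FPS2

# Assumption: constant 43 mph for the whole window (the report gives 39 mph at impact)
for label, t in (("first detection", 6.0), ("braking flagged", 1.3)):
    d = distance_ft(43, t)
    print(f"{label}: {t} s before impact -> about {d:.0f} ft away, "
          f"needs ~{required_decel_g(43, d):.2f} g to stop")
```

Six seconds out corresponds to roughly 378 feet, where a gentle stop of about 0.16 g would have sufficed. At 1.3 seconds the distance shrinks to about 82 feet, and stopping would have required roughly 0.75 g, a hard emergency stop.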

Competing, or complementary, technologies

“Since the Tempe tragedy,” wrote Nick Stockton for SPIE, “many experts in the remote sensing community have pointed out that if Uber’s sensor array had included a thermal infrared camera the car likely would have identified Herzberg in time to hit the brakes.”

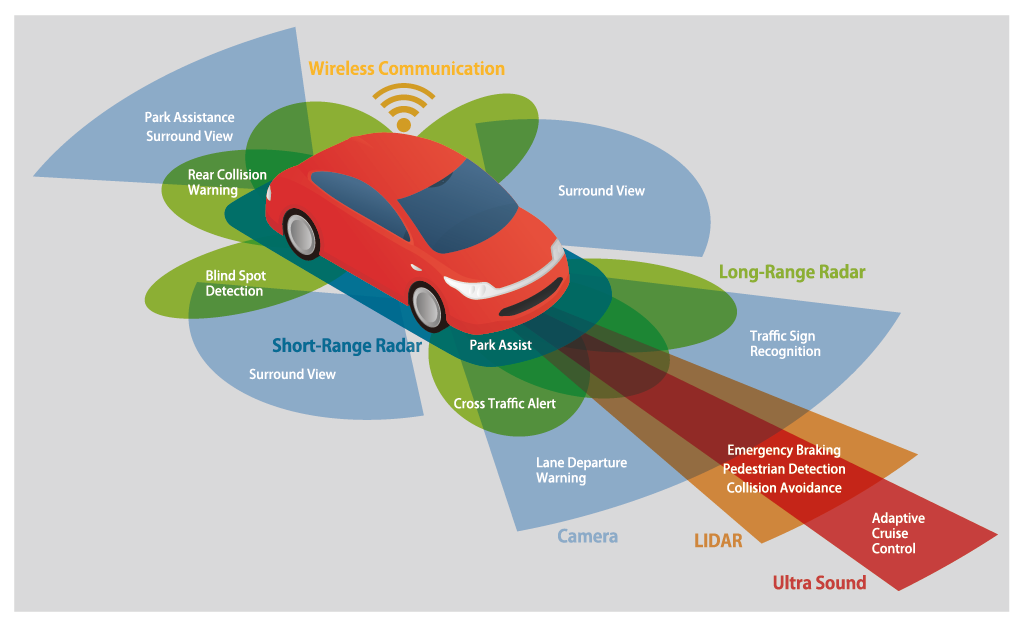

As John R. Quain summarized in a 2019 New York Times article: “Until now, the standard model for autonomous cars has used some combination of four kinds of sensors—video cameras, radar, ultrasonic sensors and lidar. It’s the approach used by all the leading developers in driverless tech, from Aptiv to Waymo. However, as dozens of companies run trials in states like California, deployment dates have drifted, and it has become increasingly obvious that the standard model may not be enough.”

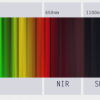

Video cameras don’t work well in exceptionally bright conditions. Radar is good at judging movement but doesn’t provide a clear image. Ultrasonic sensors don’t work well at a distance. Lidar shares a shortcoming with Toyota’s old Night View technology: it doesn’t work as well in fog or rain. And, of course, the artificial intelligence (AI) that autonomous vehicles use to crunch all the incoming information and determine when something, or somebody, needs to be avoided is only as good as the data it receives.

A number of players, with varying technologies, are hoping to provide a solution, or fill a critical void to link what’s already out there.

Teledyne e2v, part of the Teledyne Imaging Group, plans to launch Hydra3D in July 2020. It is an 832×600-pixel time-of-flight CMOS image sensor built around a pixel with three memory nodes, tailored for 3D detection and distance measurement in diverse operating conditions. The new sensor will provide very fast transfer times for customers seeking the highest levels of 3D performance, including no motion blur, best-in-class temporal precision, high speed, long range (over 10 meters), and outdoor operation.
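To see why temporal precision matters so much, consider the basic time-of-flight relation: distance equals the speed of light multiplied by the round-trip time, divided by two. The article does not describe how Hydra3D’s three-memory-node pixel actually measures that time. This sketch therefore shows only the generic principle, and the function names are illustrative.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum, m/s

def distance_from_round_trip(t_round_trip_s):
    """Target distance (m): the light travels out and back, so halve the path."""
    return C_M_PER_S * t_round_trip_s / 2

def round_trip_time(distance_m):
    """Round-trip time (s) for light reflecting off a target distance_m away."""
    return 2 * distance_m / C_M_PER_S

print(f"10 m target: round trip {round_trip_time(10.0) * 1e9:.1f} ns")
print(f"1 ns timing error: {distance_from_round_trip(1e-9) * 100:.1f} cm of range error")
```

A target 10 meters away returns light in roughly 67 nanoseconds. Each nanosecond of timing error translates into about 15 centimeters of range error, so sub-nanosecond precision matters for accurate depth.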

Other groups within Teledyne Imaging offer a combination of infrared and visible imaging, referred to as “fusion imaging,” that holds promise for the future of night vision. One fusion camera concept pairs the Calibir infrared camera with a low-light visible camera, and both feed into an image-processing platform (GPU). Applications for this technology range from commercial autonomous vehicles to sophisticated defense uses such as 360° vehicle situational awareness and unmanned aircraft systems.
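The article doesn’t say how Teledyne’s GPU pipeline combines the two streams. As an illustration of the general idea only, here is about the simplest form of pixel-level fusion: scale each frame to a common range, then take a weighted average. The sketch assumes the thermal and visible frames are already registered (pixel-aligned) and the same size. The function names and the `alpha` weight are hypothetical.

```python
def normalize(frame):
    """Min-max scale a 2D grayscale frame (list of lists) to the range [0, 1]."""
    lo = min(min(row) for row in frame)
    hi = max(max(row) for row in frame)
    span = (hi - lo) or 1  # avoid division by zero on a perfectly flat frame
    return [[(p - lo) / span for p in row] for row in frame]

def fuse(thermal, visible, alpha=0.5):
    """Pixel-wise weighted average of two registered, same-size frames.

    alpha is the weight given to the thermal frame (hypothetical parameter).
    """
    if len(thermal) != len(visible) or any(
        len(a) != len(b) for a, b in zip(thermal, visible)
    ):
        raise ValueError("frames must be registered and the same size")
    t, v = normalize(thermal), normalize(visible)
    return [
        [alpha * tp + (1 - alpha) * vp for tp, vp in zip(t_row, v_row)]
        for t_row, v_row in zip(t, v)
    ]

# Toy 2x2 scene: a warm pedestrian in the lower-right pixel of the thermal frame,
# nearly invisible in a dark, noisy visible frame.
thermal = [[20, 20], [20, 37]]
visible = [[50, 52], [51, 50]]
fused = fuse(thermal, visible, alpha=0.7)
print(fused)
```

Weighting the thermal channel more heavily makes the warm pedestrian the brightest pixel in the fused frame, while the visible channel still contributes scene texture. Production systems typically use more sophisticated fusion methods than this.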

The platform is highly customizable, supporting a variety of interfaces, lenses, image-processing features, and hardware options that serve a wide range of uncooled long-wave infrared (LWIR) imaging applications. The camera series architecture also lends itself to customization of cameras and cores, with options including wafer-level packaged devices, smart embedded algorithms, and real-time fusion imaging.

FLIR Systems, which designed BMW’s thermal infrared cameras, is enthusiastic about its new automatic emergency braking system that combines radar and thermal camera data. The Israeli startup AdaSky, the Swedish auto supplier Veoneer, and Seek Thermal, an eight-year-old company in Southern California, are all touting far-infrared camera systems that provide better views in challenging situations. And a company called BlinkAI Technologies, which spun out of the MIT-Harvard Martinos Center for Biomedical Imaging, has launched a project called RoadSight that promises to combine imaging technology and machine learning into a winning solution for autonomous vehicles.

Lidar technology is still in the mix, too. Luminar Technologies and Aurora Innovation, which purchased Montana-based startup Blackmore in 2019, are both pouring resources into longer-wavelength versions of lidar, despite Elon Musk’s infamous put-down of the technology as “lame.”

As Bobby Hambrick, CEO of AutonomouStuff—a large supplier of software and engineering services for the robotics industry—told the New York Times, “Every couple of months there’s a new sensor coming out.”

Whichever technology, or combination of technologies, gains traction with autonomous carmakers, analysts predict a burgeoning market for some time to come. Research and Markets projects that the sector will grow at a compound annual rate of more than 15 percent through 2024, reaching revenues of about $3 billion.
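Compound annual growth works like compound interest: each year’s revenue is the previous year’s multiplied by (1 + rate). The projection above gives the rate and the end value but not the base year or base revenue. The sketch below therefore treats 2019 as a hypothetical base year, purely to show how the figures relate.

```python
def project(value, rate, years):
    """Compound growth: value * (1 + rate) ** years."""
    return value * (1 + rate) ** years

def implied_start(end_value, rate, years):
    """Invert compound growth to recover the starting value."""
    return end_value / (1 + rate) ** years

# Hypothetical: 2019 as the base year, so 2024 is five years of growth at 15 percent
base = implied_start(3e9, 0.15, 5)
print(f"implied 2019 base: ${base / 1e9:.2f} billion")
print(f"check, 2024 projection: ${project(base, 0.15, 5) / 1e9:.2f} billion")
```

At 15 percent a year, revenue roughly doubles over five years (1.15⁵ ≈ 2.01). Under this assumed base year, a $3 billion market in 2024 implies one of roughly $1.5 billion in 2019.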

Until things shake out, the best way to roll is still to keep your hands on the wheel and your eyes on the road.
