How mobile sensors + cloud processing are powering new consumer apps

A Q&A with Ben Dawson on how sophisticated image processing algorithms bring pattern matching to everyday life

Computer vision algorithms have powered industrial inspection systems for years. But recent consumer-oriented apps show how far image processing extends into everyday life. Petmatch is a smartphone app that locates pets for sale in your area that look like the photos you give it. And the Firefly app on Amazon’s Fire smartphone claims to recognize 100 million real-world objects…which are then just a click away from your Amazon shopping cart.

Whatever you think of these applications themselves, the pattern recognition and image processing technology behind them is remarkable. Possibility asked one of our resident image processing professionals for the layman’s guide to the basic question of “how do they do that?”

Ben Dawson is Director of Strategic Development at Teledyne DALSA. He earned his MSEE and PhD degrees from Stanford University and was on staff at MIT. His work at Teledyne DALSA includes algorithm development and evaluating new technology.

Possibility: Ben, thank you for sharing your processing algorithm wisdom. Let’s start with the Petmatch app. It takes a photo of your dream pet as input and compares it against images from a database. How do computers do “animal face match,” and what types of algorithms or genius might be behind it?

Ben: Similar to human vision, machine and computer vision systems first ask “where is it” and then “what is it”. The computer first finds your pet’s face using features, such as eye corners and nostrils, which are constant over a wide range of views, pets and environmental conditions. Then a “stretchy” model (Petmatch calls it a “geometric model”) of an animal face is fit to your pet’s face features to say “where is it” in the photo. Image similarity, or “what is it”, might use shapes, color or color patterns to calculate similarity and find an “animal face match” in their image database. Unlike human face recognition, the match doesn’t have to be perfect to be satisfactory…though you would hope for same species at least.
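As a rough illustration of the “what is it” step Ben describes, the sketch below ranks database entries by how closely their feature vectors match a query photo. It is not Petmatch's actual method: the three-number feature vectors, the pet names, and the functions `cosine_similarity` and `best_matches` are all assumptions made for this example, and a real system would use far richer shape and color descriptors.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no information to compare
    return dot / (norm_a * norm_b)


def best_matches(query, database, top_k=3):
    """Return the names of the top_k database entries most similar to the query."""
    scored = [(cosine_similarity(query, feats), name)
              for name, feats in database.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]


# Hypothetical database: each vector might encode, say, coat color,
# face roundness, and ear shape on a 0-to-1 scale (invented values).
PET_DATABASE = {
    "tabby_cat": [0.9, 0.1, 0.3],
    "black_lab": [0.1, 0.1, 0.9],
    "golden_retriever": [0.8, 0.6, 0.2],
}

# Features extracted from the user's "dream pet" photo (also invented).
QUERY = [0.85, 0.15, 0.35]

ranked = best_matches(QUERY, PET_DATABASE)
```

Because the match only has to be satisfactory rather than perfect, returning a ranked shortlist instead of a single answer fits the use case Ben describes.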

Possibility: What are some of the major challenges with these algorithms/approaches?

Ben: Poor quality input images or images of pet (or human) faces that are not nearly frontal view make the matching process much harder. First, the “stretchy” model can’t match to constant points, such as eye corners and nostrils, if the vision system can’t see them. Second, the database can’t match patterns, such as shape and eye color, if they are distorted or obscured. Lighting can be very important for successful matching.

You want a large database to improve the chances of matching, but maintaining and searching that database requires computational resources and constant work. The cloud has the database and the massively parallel computational power to do a rapid search.

Possibility: Why are humans and animals so good at pattern recognition?

Ben: Humans and other animals are good at pattern recognition because we have to be in order to survive; it’s a survival skill. Animals that do better at recognizing food and predators survive to make more animals with better recognition abilities. We only see the amazing results of this long evolutionary process. Among the results are vast computational resources: billions of neurons that process images to allow imaging in a wide range of environments, extract key features and quickly identify things. You need to quickly know whether the thing moving in the grass is your pet or a poisonous snake. Our smartphone apps are useful and entertaining, but I wouldn’t depend on them to make that decision.

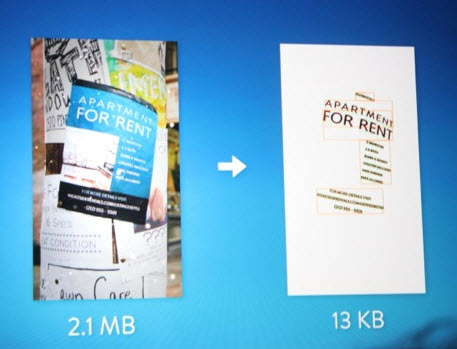

Possibility: The Amazon Fire smartphone and its Firefly app would seem to bring the challenges of pattern matching to another level. It claims to identify millions of objects, read text, and identify video, movies and songs. But it’s not doing all of that on the phone itself, is it?

Ben: As you suggest, the smartphone does some processing to extract key features of a product from images or audio to reduce the amount of data sent to the cloud. Once in the cloud, Amazon uses those features for identification against a huge database. Amazon also has a cloud service, called the Amazon Mechanical Turk, for pattern recognition tasks that require human intelligence. I can imagine the Turk being called in when the computer vision algorithms come up short.

What kinds of “key features” would such a system look for? Based on other work, I’d guess they would start by looking for features that are relatively invariant over lighting and photo angle changes. In discussing pet face matching, we saw that these included the corners of the eyes, patterns of color, and perhaps texture. For manufactured products the vision system would be looking for features such as color, texture, and overall shape. Corners are also important features that are invariant over a wide range of lighting and views. If a barcode is in the image, then that gives quick recognition. Unlike face recognition, there is no “stretchy” model that would fit millions of objects, but we can still use the relationships between key features to limit the database search and so make it faster. For example, having found some corners of a product, we can use their geometric ratios as a “meta-feature” to improve search.
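One way to picture the geometric-ratio “meta-feature” is the sketch below, which turns a set of corner points into a signature that stays the same when the product is moved, rotated, or resized in the photo, and then uses that signature as a lookup key to narrow the search. This is an assumption-laden illustration, not Amazon's method: the names `ratio_signature` and `build_index`, the sample products, and the rounding precision are all invented here, and the signature does not compensate for perspective distortion from steep photo angles.

```python
import math
from itertools import combinations


def ratio_signature(corners, digits=2):
    """Sorted pairwise corner distances, each divided by the longest one.

    Dividing by the longest distance removes scale; using distances
    (not coordinates) removes position and rotation.
    """
    dists = sorted(math.dist(p, q) for p, q in combinations(corners, 2))
    longest = dists[-1]
    return tuple(round(d / longest, digits) for d in dists)


def build_index(products):
    """Group product names by signature so a query checks only one bucket."""
    index = {}
    for name, corners in products.items():
        index.setdefault(ratio_signature(corners), []).append(name)
    return index


# Hypothetical catalogue: corner coordinates of each product's front face.
PRODUCTS = {
    "square_cd_case": [(0, 0), (1, 0), (1, 1), (0, 1)],
    "dvd_box": [(0, 0), (2, 0), (2, 3), (0, 3)],
}
INDEX = build_index(PRODUCTS)

# Corners found in a photo: the same square, three times larger and rotated 90 degrees.
photo_corners = [(0, 0), (0, 3), (-3, 3), (-3, 0)]
candidates = INDEX.get(ratio_signature(photo_corners), [])
```

Rounding makes the lookup tolerant of small measurement noise, but ratios that fall near a rounding boundary can land in the wrong bucket, so a practical system would more likely search nearby signatures as well.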

As with the pet-matching situation, poor input makes matching harder. In a recent review of the Firefly app, Tim Moynihan of Wired noted that when he used it to identify things out in the real world, “Firefly had trouble when game boxes, CDs, and Blu-ray discs were in those plastic security containers, or when their covers were obscured by stickers and price tags.”

At the Fire product launch, Jeff Bezos also mentioned that the phone uses local context to improve object recognition rate. So, for example, the GPS position information can bias the reading of an address label to where you are. This brings up another important way to improve computer or machine vision: use information that is “outside” of the image to reduce the possibilities of what is “inside” the image. So in Indonesia, something moving in the grass has a good chance of being a snake, but not in Ireland (thanks to St. Patrick and/or the last Ice Age).
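The idea of using information “outside” the image can be sketched with Bayes' rule: the image alone gives a likelihood for each label, and the location supplies a prior that reweights it. The numbers below are invented purely for illustration, and `posterior` is a hypothetical helper, not anything described at the Fire launch.

```python
def posterior(likelihoods, priors):
    """Combine image evidence with a context prior and normalise to sum to 1."""
    unnormalised = {label: likelihoods[label] * priors.get(label, 0.0)
                    for label in likelihoods}
    total = sum(unnormalised.values())
    if total == 0:
        raise ValueError("no label is possible under both image and context")
    return {label: value / total for label, value in unnormalised.items()}


# What the image alone suggests about the thing moving in the grass
# (illustrative values only).
IMAGE_LIKELIHOODS = {"snake": 0.6, "pet": 0.4}

# Context priors keyed by location (illustrative values only).
LOCATION_PRIORS = {
    "Indonesia": {"snake": 0.5, "pet": 0.5},
    "Ireland": {"snake": 0.001, "pet": 0.999},
}

in_indonesia = posterior(IMAGE_LIKELIHOODS, LOCATION_PRIORS["Indonesia"])
in_ireland = posterior(IMAGE_LIKELIHOODS, LOCATION_PRIORS["Ireland"])
```

With these made-up inputs, the same image evidence favors “snake” in Indonesia and “pet” in Ireland, which is the effect the GPS example is after.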

Possibility: Are there any other interesting image processing applications hiding in plain sight every day? Do you see other opportunities where image processing algorithms could improve our lives?

Ben: Many cameras and smart devices already use face location (“where is it”) to set focus, color balance, and perhaps exposure. Automated license plate reading for traffic and parking control, tolling and fines, and police work is another everyday image processing application. Nobody likes to get a ticket from a robot, so in many areas the implementation is up for debate, but the technology is certainly available. I think other automotive applications will be less controversial. More and more cars include machine vision applications such as automated parking, lane change assist, driver alertness monitoring (are your eyes starting to blink faster?), backup warning, and collision avoidance (applying the brakes if you are approaching another car or object at too fast a rate). Unfortunately, these advances in automotive safety don’t compensate for the hazards produced by being distracted by your smartphone.