Roll and Rock: Students Compete in Martian Robotic Guidance and Imaging Competition

University Robotics Team Puts on a Show at NASA/National Institute of Aerospace Robo-Ops Competition

Houston, we have a challenge! Each year, the “Rock Yard” at Johnson Space Center in Houston, Texas, is the site of a unique event: the Revolutionary Aerospace Systems Concepts-Academic Linkages (RASC-AL) Robo-Ops planetary rover engineering competition, hosted by NASA and managed by the National Institute of Aerospace (NIA).

During this competition, the best and brightest graduate and undergraduate students from across engineering disciplines take on a NASA engineering challenge: in this case, to build a planetary rover prototype that can navigate simulated lunar and Martian terrain as it performs a series of tasks. Dozens of university teams from across the country submit proposals, but only eight finalists are chosen to bring their proposed solutions to life and deploy them for NASA and industry experts at the RASC-AL Robo-Ops competition.

Mission Control and Remote Guidance

If the Robo-Ops Rock Yard simulates an out-of-this-world environment, so do the logistics of the competition itself. A three-member student team accompanies each planetary rover to the Rock Yard, preparing the vehicle for launch and resolving any technical issues that arise on site. A second team remains on campus to act as “mission control.” From mission control, operators remotely guide the rover to show what it can do: they maneuver it across varied ground cover and terrain, including sand and gravel pits, slopes and grades, simulated craters, and strewn rock fields, to pick up target rock samples of a designated color and size and place them in the rover.

While the students at the Rock Yard can get up close and personal with their rover, their peers in mission control must complete their tasks guided only by the information that sensors and cameras on the rover transmit over a commercial broadband wireless uplink.

NASA judges score each rover’s performance based on its ability to gather target rocks within the time limitations, as well as on how well each team meets the competition’s other requirements.

Preparing for Launch

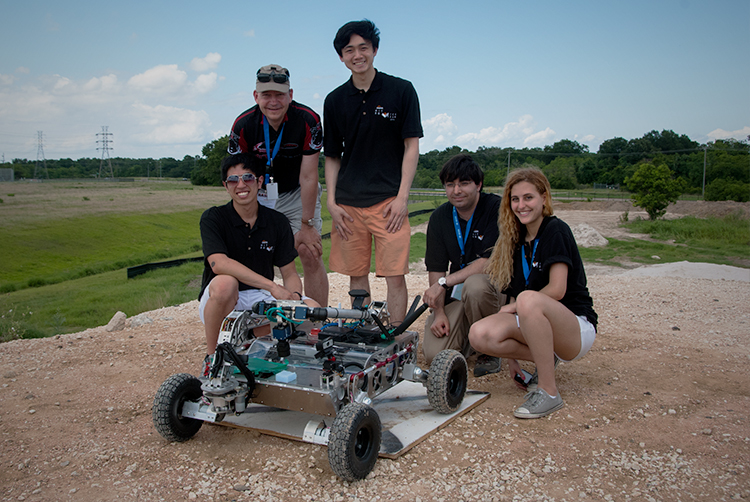

The university’s robotics team was formed in the fall of 2013 and is made up of students from across the globe, including Australia, Canada, China, Morocco, Switzerland, and the U.S. The team’s proposal for Robo-Ops was its first entry in any robotics competition.


Before the team could make its competitive debut, its members had to formulate a rover design that would catch the attention of NASA judges and earn a spot at Robo-Ops 2014. In doing so, the team faced two key challenges. The first was to design a rover that could handle the rough terrain of the Rock Yard yet be easy to navigate from a remote location; the second was to develop a communications strategy that worked within the limited transmission bandwidth available while still delivering to mission control the data needed to complete the assigned tasks.

“We knew that the rover would rely on cameras to provide visual guidance to mission control, but we did considerable research to determine exactly what types of cameras we should deploy,” explains a junior in the university’s aerospace program. “Our design included a basic webcam, which could give the operator in the mission control ‘pilot seat’ real-time, low-resolution impressions of the area immediately surrounding the rover. This would allow the pilot to operate the boom to pick up a rock and confirm that it had been placed in the basket on the rover. Then, we wanted to complement the webcam functionality with some type of machine vision technology that would give us a broader line of sight, higher performance, and better resolution so that we could target rocks of appropriate sizes and colors more effectively.”

One of NASA’s goals for Robo-Ops is to involve members of the community in the competition, so the robotics team approached a number of vendors to request support. The team received an enthusiastic response from Teledyne DALSA. “These students presented us with a very challenging vision problem, and it’s always exciting to engage with bright minds to explore new opportunities for innovation,” notes Ben Dawson, director of strategic development at Teledyne DALSA. “In a lunar- or Martian-type environment, like that replicated in NASA’s Rock Yard, there is no way to control for many of the variables we typically address when designing more traditional machine vision systems, such as background color, lighting, or part shape or location. For example, the rover might traverse a landscape that goes from light-colored gravel to dark sand to an even darker ‘crater,’ or the weather could change from bright sunlight to an overcast sky, and there is no way to account for these changes ahead of time. Plus, the task is to identify and pick up rocks of different sizes and colors (from two to eight cm, in all the colors of the rainbow), another variable for which designers can’t really plan in advance.”

Do You See What I See?

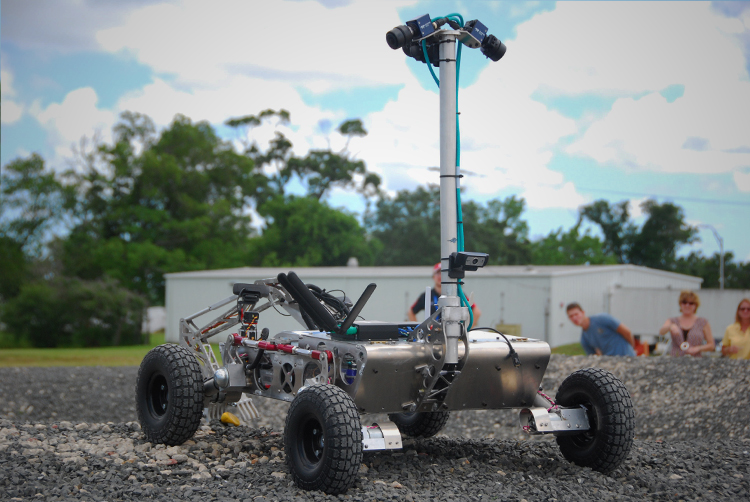

Working in collaboration with Teledyne DALSA, the robotics team chose three BOA Pro Smart Cameras with wide-angle lenses, which they mounted on a thin, mast-like boom at the back of the rover to capture images to the left, center, and right of the vehicle. Sherlock Embedded machine vision software running on the BOA cameras applies vision algorithms configured by the team to detect rocks and alert mission-control operators when a target rock is spotted.
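The article does not describe which Sherlock Embedded tools or settings the team used, so the following is only a hypothetical sketch, in plain Python, of one common approach to this kind of task: keep pixels whose hue is close to a target hue, group them into 4-connected blobs with a flood fill, and keep only blobs whose pixel area fits the 2 to 8 cm size range. The `find_target_rocks` function, the RGB-list image format, the thresholds, and the fixed pixels-per-centimeter scale (in practice it changes with distance from the camera) are all assumptions. Matching on hue and saturation rather than raw RGB gives some tolerance to the brightness changes Dawson describes, though it is far from full lighting invariance.

```python
import colorsys
from collections import deque


def find_target_rocks(image, target_hue, hue_tol=0.05, min_sat=0.4,
                      min_val=0.2, px_per_cm=4.0, min_cm=2.0, max_cm=8.0):
    """Return (row, col) centroids of blobs matching a target color and size.

    Hypothetical sketch: `image` is a list of rows of (r, g, b) tuples (0-255),
    and hues are in [0, 1) as returned by colorsys.rgb_to_hsv.
    """
    h, w = len(image), len(image[0])

    def matches(r, g, b):
        hue, sat, val = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        # Hue wraps around (red sits at both 0 and 1), so use circular distance.
        d = abs(hue - target_hue)
        d = min(d, 1 - d)
        return d <= hue_tol and sat >= min_sat and val >= min_val

    mask = [[matches(*image[y][x]) for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    # Assumption: a rock of size L cm covers roughly an (L * px_per_cm)-pixel square.
    min_area = (min_cm * px_per_cm) ** 2
    max_area = (max_cm * px_per_cm) ** 2

    centroids = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill collects one 4-connected blob.
            queue = deque([(y, x)])
            seen[y][x] = True
            pixels = []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Keep only blobs whose area falls in the target size range.
            if min_area <= len(pixels) <= max_area:
                row = sum(p[0] for p in pixels) / len(pixels)
                col = sum(p[1] for p in pixels) / len(pixels)
                centroids.append((row, col))
    return centroids
```

For example, on a gray frame containing a 10 by 10 pixel red patch, a 2 by 2 pixel red speck, and a blue patch, calling `find_target_rocks(frame, target_hue=0.0)` returns only the centroid of the red patch, because the speck falls below the assumed minimum area and the blue patch has the wrong hue. In the team's workflow a human spotter in mission control confirms each detection before the pilot moves in, so a detector like this only needs to propose candidates.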

The BOA also JPEG-compresses the images to conserve bandwidth on the link to mission control. “Images from these BOA cameras are sent via a high-resolution feed to three monitors in mission control, which are manned by a team member in the ‘spotter’ seat,” Baird explains. “Once the spotter confirms the accuracy of the image, the pilot is able to maneuver the rover and scoop up the rock.” Combining compressed high-resolution BOA images with low-resolution webcam images reduced the bandwidth required for communication between Houston and mission control in Cambridge, Massachusetts.
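A rough bandwidth budget shows why mixing compressed high-resolution stills with a low-resolution live feed helps. The resolutions, frame rates, and 20:1 JPEG compression ratio below are illustrative assumptions, not figures from the team's rover, and `stream_kbps` is a hypothetical helper that ignores protocol overhead and frame-to-frame variation in compressed size.

```python
def stream_kbps(width, height, fps, bits_per_pixel=24, compression_ratio=1.0):
    """Approximate bitrate in kilobits per second for an image stream.

    Illustrative only: real JPEG sizes vary with scene content.
    """
    raw_bits_per_second = width * height * bits_per_pixel * fps
    return raw_bits_per_second / compression_ratio / 1000


if __name__ == "__main__":
    # All values here are hypothetical, chosen only to show the trade-off.
    hi_res = dict(width=1280, height=960)
    # Option A: three high-res cameras streaming uncompressed video at 10 fps.
    all_raw = 3 * stream_kbps(**hi_res, fps=10)
    # Option B: three high-res cameras sending one JPEG still per second,
    # plus one low-res webcam streaming JPEG frames at 10 fps.
    mixed = (3 * stream_kbps(**hi_res, fps=1, compression_ratio=20)
             + stream_kbps(320, 240, fps=10, compression_ratio=20))
    print(f"uncompressed high-res video: {all_raw:,.0f} kbps")
    print(f"compressed stills + low-res webcam: {mixed:,.0f} kbps")
```

Under these assumptions the mixed approach needs roughly two orders of magnitude less bandwidth than streaming full-resolution video, while the pilot still gets a continuous, if coarse, view of the area around the rover.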

“Knowing the capabilities of the camera was critical when we picked the BOA for our application, but the camera’s small footprint was equally important,” a member of the robotics team says. “Each rover must meet overall size and weight limits: it must weigh no more than 45 kg and fit in a box that measures one meter square by one-half meter high. The BOA is extremely lightweight, so the boom can easily support three cameras without any change to the rover design.” In addition, the BOA is rugged enough to withstand the conditions of the “space-like” environment.
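The quoted size and weight limits amount to a simple compliance check that a team can rerun whenever hardware such as cameras is added. A minimal sketch, assuming the box has a 1 m by 1 m footprint and is 0.5 m high, and that the rover must fit upright; the `Rover` class, `limit_violations`, and `with_payload` are hypothetical names, and any masses or dimensions passed to them are placeholders rather than the team's real figures.

```python
from dataclasses import dataclass, replace
from typing import List

# Limits as quoted above: at most 45 kg, inside a 1 m x 1 m x 0.5 m box.
MAX_MASS_KG = 45.0
MAX_FOOTPRINT_M = 1.0
MAX_HEIGHT_M = 0.5


@dataclass(frozen=True)
class Rover:
    """Hypothetical summary of a rover design (SI units)."""
    mass_kg: float
    length_m: float
    width_m: float
    height_m: float


def limit_violations(rover: Rover) -> List[str]:
    """Return a description of every limit the rover breaks (empty if none)."""
    problems = []
    if rover.mass_kg > MAX_MASS_KG:
        problems.append(f"mass {rover.mass_kg} kg exceeds {MAX_MASS_KG} kg")
    if rover.length_m > MAX_FOOTPRINT_M or rover.width_m > MAX_FOOTPRINT_M:
        problems.append("footprint exceeds 1 m x 1 m")
    if rover.height_m > MAX_HEIGHT_M:
        problems.append(f"height {rover.height_m} m exceeds {MAX_HEIGHT_M} m")
    return problems


def with_payload(rover: Rover, added_kg: float) -> Rover:
    """Return a copy of the rover with extra mass, e.g. cameras on the boom."""
    return replace(rover, mass_kg=rover.mass_kg + added_kg)
```

For example, `limit_violations(with_payload(base, 3 * camera_kg))`, with placeholder values for `base` and `camera_kg`, reports whether adding three cameras keeps the design under the 45 kg limit; an empty list means every quoted constraint is still met.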

Mission Accomplished

The team placed second at the 2014 Robo-Ops competition, identifying and picking up 56 target rocks, a result its members view as a fitting end to their first-ever competition. And with time to refine the rover before next year’s contest, the sky’s the limit for this group of talented students.
