The State of the Race: 3D Imaging and Artificial Intelligence Accelerate at Tokyo 2021

By observing the fastest humans in the world with sophisticated 3D imaging systems, we might better understand what makes them the best.

Understanding human motion has fascinated us since the earliest cave paintings. Aristotle wrote about it. Leonardo da Vinci tried to capture it in sketches. The earliest motion pictures sought to understand it in detail. What’s the difference between a walk, a jog, and a run? Motion studies have since become an investigative and diagnostic tool in many fields, including medicine (physical therapy and kinesiology), sports, and even surveillance. What makes us fast? What makes us healthy? What makes us unique?

Human motion analysis can be divided into three categories: human activity recognition, human motion tracking, and analysis of body and body part movement. Most efforts in this area rely on sequences of static postures, which are then statistically analyzed and compared to modeled movements.

In sports, gait analysis is used to optimize athletic performance or to identify motions that may cause injury or strain. Unlike many motion-capture setups, systems that track high-performance athletes can’t rely on physical markers for optical analysis: anything that interferes with natural movement could ruin the results. That leaves far more work for the system itself, which must interpret what’s happening in front of the cameras without those aids.

One of the most exciting venues to study how people move is where they move best: the Olympics.

3D High-Speed Imaging of Very Fast Humans

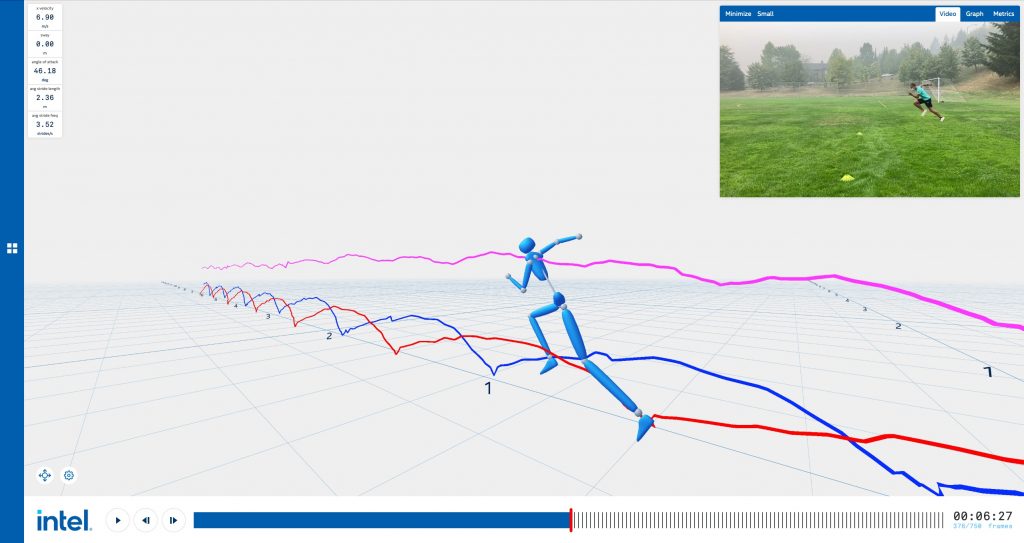

One of the most notable imaging systems at the Tokyo 2021 Olympics had to be 3D Athlete Tracking, or 3DAT. This first-of-its-kind platform, developed by Intel and Alibaba, combines AI and computer vision motion tracking to analyze how athletes perform in the 100-meter, 200-meter, 4×100-meter relay, and hurdle events. The system captures feeds from four pan-tilt-mounted digital cameras installed at the Olympic Stadium.
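The article doesn’t say how 3DAT fuses its four camera feeds into 3D. One textbook way to recover a 3D point from several calibrated cameras is linear least-squares triangulation, sketched below purely as an illustration. It assumes each camera’s 3×4 projection matrix is known for the frame in question (pan-tilt cameras would need per-frame calibration) and that the same body keypoint, such as a hip, has already been detected in every view. The function names and camera positions are hypothetical.

```python
from typing import List, Sequence, Tuple

# A 3x4 camera projection matrix (assumed known from calibration).
Matrix3x4 = Sequence[Sequence[float]]


def project(P: Matrix3x4, point: Tuple[float, float, float]) -> Tuple[float, float]:
    """Project a 3D point to pixel coordinates with a pinhole camera model."""
    X = (*point, 1.0)  # homogeneous coordinates
    x, y, w = (sum(P[r][j] * X[j] for j in range(4)) for r in range(3))
    return (x / w, y / w)


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gauss-Jordan elimination with partial pivoting."""
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, 4):
                    m[r][c] -= f * m[col][c]
    return [m[i][3] / m[i][i] for i in range(3)]


def triangulate(projections: List[Matrix3x4],
                pixels: List[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Least-squares 3D point seen by two or more calibrated cameras."""
    rows = []  # each row (a1, a2, a3, b) encodes a1*x + a2*y + a3*z = b
    for P, (u, v) in zip(projections, pixels):
        for coord, k in ((u, 0), (v, 1)):
            # From u = (P[0].X) / (P[2].X): u*(P[2].X) - P[0].X = 0; likewise v with P[1].
            r = [coord * P[2][j] - P[k][j] for j in range(4)]
            rows.append((r[0], r[1], r[2], -r[3]))
    # Normal equations (A^T A) x = A^T b give the least-squares solution.
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    atb = [sum(row[i] * row[3] for row in rows) for i in range(3)]
    x, y, z = _solve3(ata, atb)
    return (x, y, z)


if __name__ == "__main__":
    # Two hypothetical cameras: one at the origin, one shifted 1 m along x.
    cam_a = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
    cam_b = [[1, 0, 0, -1], [0, 1, 0, 0], [0, 0, 1, 0]]
    hip = (0.5, 0.2, 4.0)
    seen = [project(cam_a, hip), project(cam_b, hip)]
    print(triangulate([cam_a, cam_b], seen))  # approximately (0.5, 0.2, 4.0)
```

This is the inhomogeneous form of linear triangulation, chosen here because it needs no linear-algebra library; production systems more commonly solve the homogeneous version with an SVD and weight each view by detection confidence.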

The live footage is fed to a cloud-based artificial intelligence program that applies pose estimation and biomechanics algorithms to extract the 3D form and motion of each athlete. Deep learning then analyzes each athlete’s movements, identifying key performance characteristics such as top speed and deceleration.
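Intel hasn’t published 3DAT’s biomechanics code, so the following is only a sketch of how metrics like top speed and deceleration could be derived once pose estimation has produced one position per frame. It assumes a hypothetical series of distances along the track for an athlete’s hip keypoint, sampled at a fixed frame rate; the function names are illustrative.

```python
from typing import List, Tuple


def interval_speeds(positions_m: List[float], fps: float) -> List[float]:
    """Average speed (m/s) over each frame interval, from distance along the track."""
    return [(b - a) * fps for a, b in zip(positions_m, positions_m[1:])]


def top_speed_and_deceleration(positions_m: List[float], fps: float) -> Tuple[float, float]:
    """Return (top speed in m/s, average deceleration in m/s^2 after the peak)."""
    v = interval_speeds(positions_m, fps)
    i_peak = max(range(len(v)), key=v.__getitem__)
    # Time between the midpoint of the peak interval and the midpoint of the last one.
    elapsed_s = (len(v) - 1 - i_peak) / fps
    decel = (v[i_peak] - v[-1]) / elapsed_s if elapsed_s > 0 else 0.0
    return v[i_peak], decel


# Hypothetical hip-keypoint distances (m), sampled once per second for readability.
track = [0.0, 5.0, 15.0, 27.0, 38.0, 48.0]
print(top_speed_and_deceleration(track, fps=1.0))  # (12.0, 1.0)
```

Raw frame-to-frame differences amplify pose-estimation jitter, so a real pipeline would almost certainly smooth the positions first; that step is left out to keep the arithmetic visible.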

To properly train their AI, the Intel team needed as much video footage as possible. But much of the existing footage wouldn’t work, since it showed ordinary people in motion, not elite athletes. “People aren’t usually fully horizontal seven feet in the air,” Jonathan Lee, director of sports performance technology in the Olympic technology group at Intel, told Scientific American, “but world-class high jumpers reach such heights regularly.”

As a result, the Intel team collected its own footage of elite track and field athletes in motion for the AI training set. They then manually annotated the body parts in that footage: eyes, nose, shoulders, and so on. From these labels, the AI learned to construct a 3D model that follows the changes in an athlete’s body as it moves.

Teams will be able to use this data to train their athletes more effectively, and broadcasters hope to use it to make more informative television.


This will also allow more in-depth post-race analysis, revealing information that the audience might have missed during the race itself. At exactly what moment did each athlete reach top speed, and how long did they hold it? How might that have affected the outcome?
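The article doesn’t define what “holding” top speed means in 3DAT’s analysis. As a sketch under that uncertainty, the code below treats an athlete as holding top speed while they stay within a hypothetical 2% of their peak, and reports when the peak occurred and how long that window lasted. The input is a list of per-interval speeds at a fixed sample rate; all names and numbers are illustrative.

```python
from typing import List, Tuple


def top_speed_window(v: List[float], fps: float, tolerance: float = 0.02) -> Tuple[float, float]:
    """Return (time of top speed in s, seconds spent within `tolerance` of it).

    `v` holds average speeds for consecutive sample intervals; `tolerance` is a
    hypothetical cutoff (2% below the peak by default).
    """
    i_peak = max(range(len(v)), key=v.__getitem__)
    threshold = v[i_peak] * (1 - tolerance)
    # Grow a window outward from the peak while speed stays at or above the threshold.
    start = end = i_peak
    while start > 0 and v[start - 1] >= threshold:
        start -= 1
    while end < len(v) - 1 and v[end + 1] >= threshold:
        end += 1
    time_of_peak_s = (i_peak + 0.5) / fps  # midpoint of the peak interval
    hold_s = (end - start + 1) / fps
    return time_of_peak_s, hold_s


speeds = [8.0, 10.0, 11.5, 11.8, 11.9, 11.7, 11.2, 10.9]  # hypothetical, 10 samples/s
peak_time, held_for = top_speed_window(speeds, fps=10.0)
print(f"top speed at {peak_time:.2f} s, held for {held_for:.2f} s")  # top speed at 0.45 s, held for 0.30 s
```

Growing the window outward from the peak, rather than counting every sample above the threshold, keeps a brief second surge late in the race from being added to the main plateau.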

Because this is a live, global broadcast, the system must work very quickly. Intel says it works in “near real time,” relying on specially optimized AI and computer vision hardware and software. The whole process, from capturing the footage to broadcasting the analysis, takes less than 30 seconds.

Custom AI Systems for a One-of-a-Kind Event

3DAT was just one of several AI-powered imaging systems deployed at the Olympics this year, and they all share a common source. As host broadcaster, Olympic Broadcasting Services (OBS) serves as the storyteller and visual innovator of the Games: it builds the imaging systems that capture the sporting events and delivers that footage to broadcasters around the world. That lets OBS build and test systems that local broadcasters can’t, and increasingly, those systems involve artificial intelligence.

At the beginning of this year’s Games, OBS expected to produce more than 9,500 hours of content, of which approximately 4,000 hours would come from live coverage. Handling that much footage manually for time-sensitive delivery is practically impossible, but only now can OBS turn to artificial intelligence to analyze, classify, and even “understand” it. Tagging, or logging as it is usually known in the broadcast industry, is an important first step for many video and audio processes.

In Tokyo, OBS also tested additional AI prototypes designed to identify which athletes appear where and when. Broadcasters want to use the footage that features their own national athletes, but searching hundreds of hours of footage for specific content is a laborious task unless that content is densely logged.
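Why dense logging makes that search easy can be illustrated with a simple inverted index. The sketch assumes each AI-generated tag records a clip, a time range, an athlete, and a country; the `LogEntry` and `FootageIndex` names, clip IDs, and athlete names are hypothetical and do not describe OBS’s actual system. Once the tags exist, finding every appearance of a country’s athletes is a dictionary lookup rather than a scan through hours of video.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, List


@dataclass(frozen=True)
class LogEntry:
    """One logged appearance: who is on screen in which clip, and when."""
    clip_id: str
    start_s: float
    end_s: float
    athlete: str
    country: str  # e.g. a three-letter country code


class FootageIndex:
    """Inverted index from athlete and country to logged appearances."""

    def __init__(self) -> None:
        self._by_athlete: DefaultDict[str, List[LogEntry]] = defaultdict(list)
        self._by_country: DefaultDict[str, List[LogEntry]] = defaultdict(list)

    def add(self, entry: LogEntry) -> None:
        self._by_athlete[entry.athlete].append(entry)
        self._by_country[entry.country].append(entry)

    def for_athlete(self, athlete: str) -> List[LogEntry]:
        # .get avoids creating empty entries for names that were never logged
        return list(self._by_athlete.get(athlete, []))

    def for_country(self, country: str) -> List[LogEntry]:
        return list(self._by_country.get(country, []))


index = FootageIndex()
index.add(LogEntry("heat-3-cam-2", 12.0, 24.5, "Runner A", "JPN"))
index.add(LogEntry("final-cam-1", 3.0, 14.0, "Runner B", "KEN"))
index.add(LogEntry("final-cam-4", 5.5, 13.0, "Runner A", "JPN"))
print([e.clip_id for e in index.for_country("JPN")])  # ['heat-3-cam-2', 'final-cam-4']
```

The index is only as good as the tags feeding it, which is why the recognition step, not the lookup, is the hard part.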

This kind of video and audio recognition could be the starting point for many interesting and useful applications in sports content production, from live automated video switching to fully automated highlights. These technologies already exist for some sports, but those events are simpler to cover than the Olympic Games. In more ways than one, the Olympics is the true proving ground for the best of the best.
