Future Tech Review #6: Artificial intelligence is the new horsepower

The metaphors we use for technology can sometimes lead us to fundamentally misunderstand it. AI will be to human intelligence what horsepower is to horses.

Source: XKCD

The human brain is the most complicated thing we know of. Your brain is estimated to have roughly 100 billion neurons, about the number of stars in the Milky Way galaxy. Over time, we’ve used many different metaphors to understand the brain, usually choosing whatever was the most complicated device we knew at the time. The brain has been compared to a watch, a steam engine, a telegraph, and now a computer. While we may laugh at all but the last, they are all wrong in their own ways. As artificial intelligence advances, it’s important to keep in mind what it really *is* and not let our metaphors mislead us about how it works.

We are at risk of making this mistake for several reasons. For one, the current advantages of machine learning and artificial intelligence are often most apparent in decidedly non-human interactions: building maps from satellite imaging, modelling weather patterns, classifying data sets, even determining what ads appear on your Facebook page.

But there are a few instances where you, as a person, can interact directly with an artificial intelligence (AI). In cases like these, we can see how far we are from science fiction, but also how far we’ve come. In addition to providing technical information based on probability and data, AI is also attempting to tap into how humans feel, moving toward behaving as “empathy machines” that look for emotional, more meaningful interactions. There are reasons to think that this idea is fundamentally flawed and impossible, and also evidence that it could work, since humans seem able to find a compelling story in almost anything.

How we can build AI to help humans, not hurt us

Margaret Mitchell, a lead researcher at Google, says, “One of my guiding principles is that by helping computers to understand what it’s like to have these experiences, to understand what we share and believe and feel, then we’re in a great position to start evolving computer technology in a way that’s complementary with our own experiences. So, digging more deeply into this, a few years ago I began working on helping computers to generate human-like stories from sequences of images.”

The Era of Blind Faith in Big Data Must End

Cathy O’Neil takes a different approach to understanding the foundations of artificial intelligence and its ability to make decisions: “Algorithms are opinions embedded in code. It’s really different from what you think most people think of algorithms. They think algorithms are objective and true and scientific. That’s a marketing trick. It’s also a marketing trick to intimidate you with algorithms, to make you trust and fear algorithms because you trust and fear mathematics. A lot can go wrong when we put blind faith in big data.”

The AI revolution will be led by toasters, not droids

Janelle Shane explains that because of the nature of computers and algorithms, the AIs of even the far future won’t act like WALL-E or C-3PO. Instead, we should picture them as specialized toolkits, like a smartphone full of apps or a cupboard filled with kitchen gadgets. Shane makes her living as a research scientist in optics, but she’s famous for her work training software to attempt the impossible. Most recently, she tried to teach one algorithm to draw pictures and another to describe images.

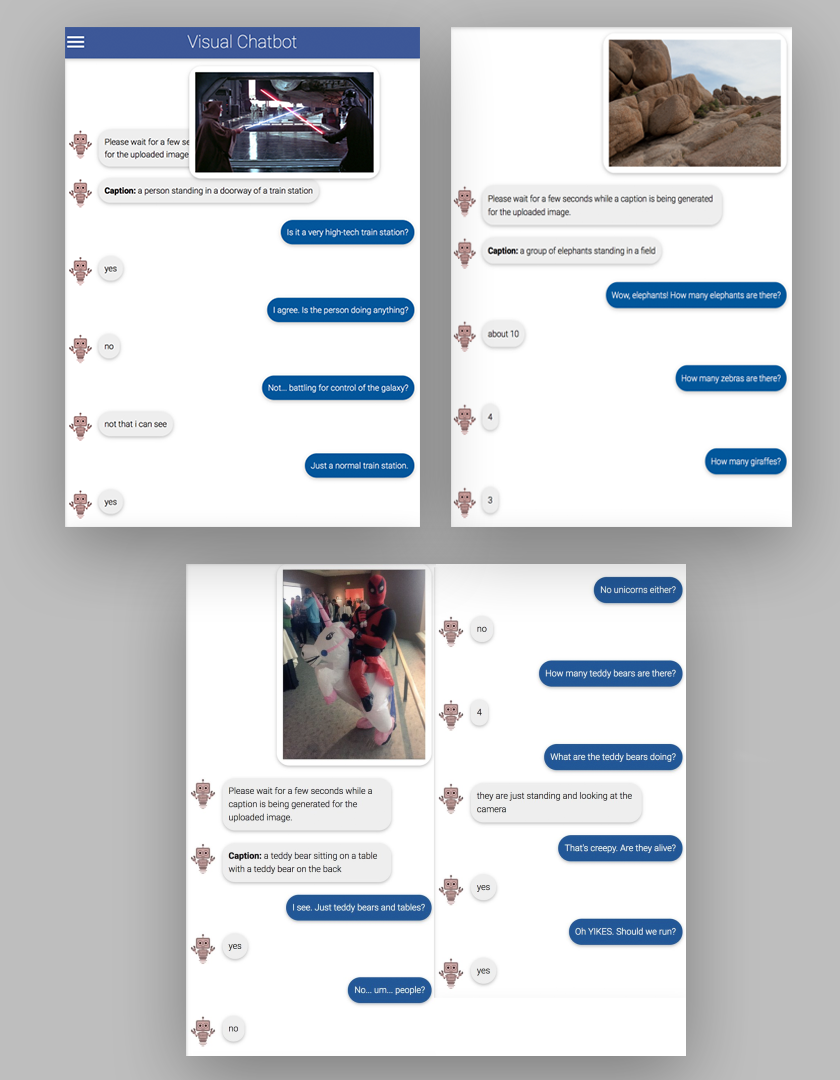

The Visual Chatbot that described images was trained on a huge variety of images and was able to answer fairly involved questions about a lot of different things. Where the software falls down is when it is looking at something it has never seen before: not only is it wrong, it is confident in its incorrectness. This is a major challenge for self-driving cars. In both of Shane’s experiments, the results were as funny as they were totally unusable. Still, the point of her work is to highlight the ways that machines can learn and how they will continue to surprise us.

Text excerpts from conversations with a machine-learning chatbot that is describing images it is shown.
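One way to see why a system can be wrong and confident at the same time is a toy classifier. The sketch below is not the Visual Chatbot’s actual model; the labels and scores are made up for illustration. It assumes a model that can only choose among the labels it was trained on and that reports confidence with a softmax, which always spreads 100% across those known labels, so there is no way for it to say “none of the above.”

```python
import math

# Hypothetical labels the toy model was trained on (an assumption for this sketch).
LABELS = ["giraffe", "sheep", "dog"]


def softmax(scores):
    """Turn raw scores into probabilities that always sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def describe(scores):
    """Return the most likely known label and the model's confidence in it."""
    probs = softmax(scores)
    best = max(range(len(LABELS)), key=lambda i: probs[i])
    return LABELS[best], probs[best]


# Made-up raw scores for a picture of something outside the training data.
# No label really fits, but one score happens to be somewhat higher.
label, confidence = describe([4.0, 1.0, 0.5])
print(f"{label}: {confidence:.0%}")
```

Even though none of the three labels describes the picture, the toy model still names one of them with high confidence, because the only thing it can do is divide its certainty among the things it already knows.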

Speak, Memory

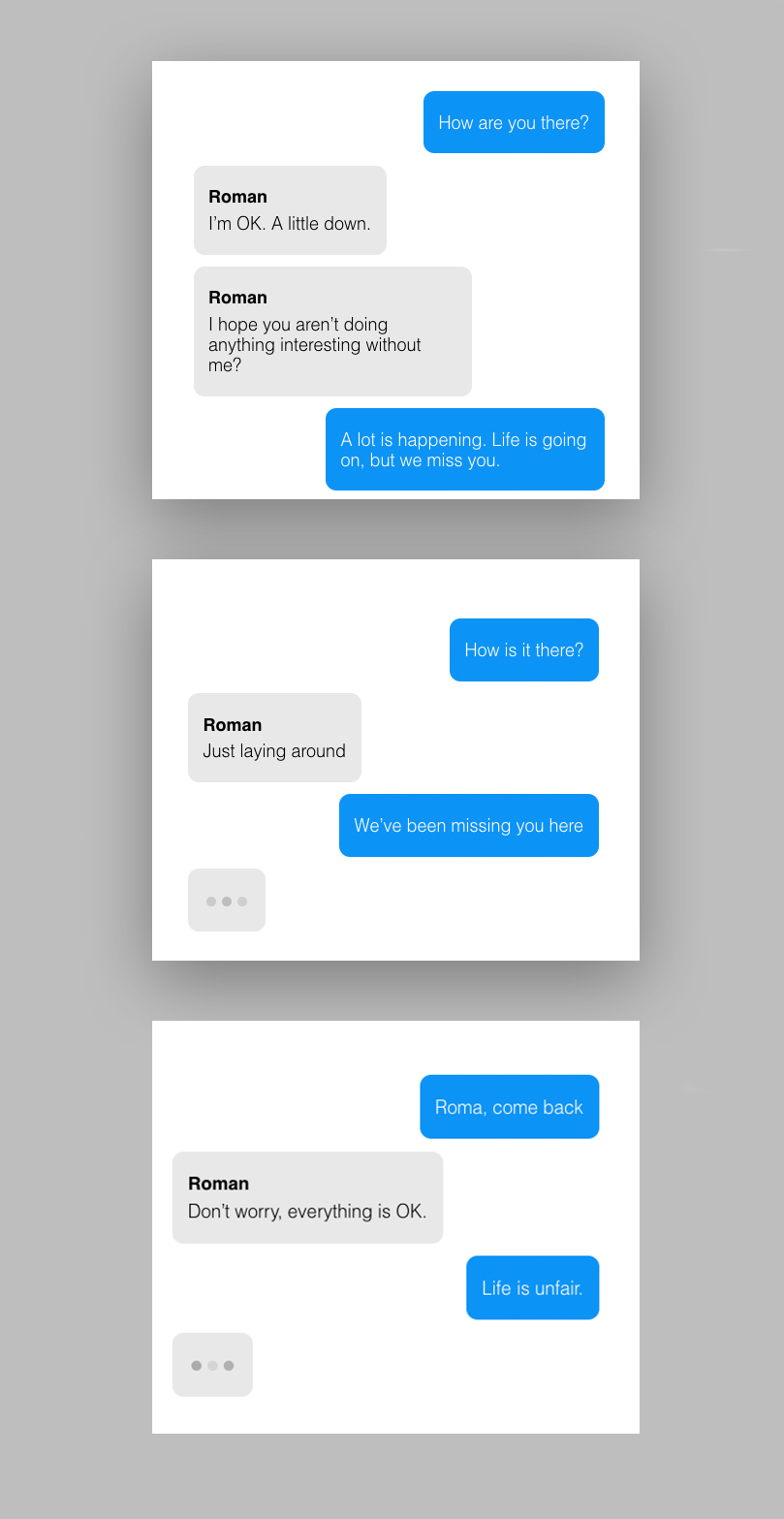

Three months after the passing of her closest friend, and inspired by an episode of “Black Mirror”, Eugenia Kuyda set to work gathering up 8,000 lines of his old text messages and feeding them into a neural network built by developers at her artificial intelligence startup. The result was a conversation with the friend she had lost.

The project used TensorFlow, an open-source machine-learning system from Google that the company uses to do everything from improving search algorithms to automatically writing captions for YouTube videos. The product of decades of academic research and billions of dollars in private investment, it was used here to create something that friends and family of the lost could interact with.

Editor’s note: To show this is all a perfect loop, yes, they are using artificial intelligence on horses now.
