Take it from the bees: Visual guidance in contradictory pathfinding scenarios

Research on understanding how bees navigate is helping us build better autonomous robots

There is no one best strategy to find your way. Even web-based mapping services rarely agree. Without tools or specialized knowledge, humans are quite likely to get lost in a natural environment.

In contrast, some of the most impressive performances in the natural world are the navigational abilities of insects. With relatively small brains and highly specialized vision systems, many insects can travel long distances to specific targets with astonishing accuracy. They do this through a highly optimized strategy for combining and processing sensory input, refined by the slow and steady process of evolution over perhaps 50 to 100 million years. Scientists recently worked out how monarch butterflies navigate their migration over thousands of miles by synthesizing very specific pieces of visual information.

Scientists have increasingly looked to nature’s systems for inspiration on how to solve challenges in artificial intelligence, engineering (such as sensor design), and robotics. Of particular interest are bees and ants, which rely mainly on learned visual information about their surroundings to find food and hidden nest entrances. In 2019, researchers published a paper on a terrestrial robot built using insights from desert ants. These ants were of particular interest because they rely heavily on visual information: the desert climate destroys the pheromones that ants in other climates use to find their way. For airborne robots, scientists have looked to bees, which must operate in a more complex 3D environment. Insect-inspired flying robots have potential uses in crop pollination, search and rescue missions, surveillance, and environmental monitoring.

Research on both groups of insects has been a fruitful intersection of insect biology and robotics. For biologists, the results of these experiments help test and refine models of the underlying biology. For roboticists, the efficient navigation processes of insects point toward computationally cheap navigation methods for mobile robots.

RoboBee: Controlled flight of a robotic insect, from the Wyss Institute and Harvard University. Its smart sensors and control electronics mimic the eyes and antennae of a bee, allowing it to sense and respond dynamically to its environment.

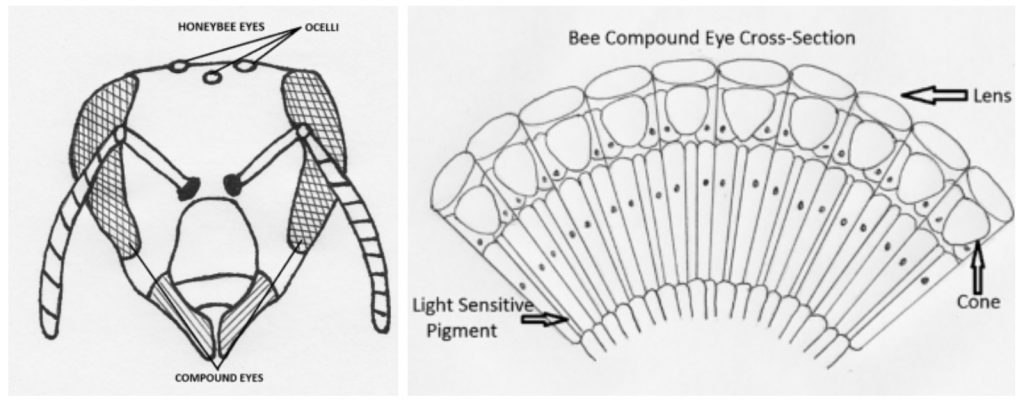

How bees see

Nobel prizewinner Karl von Frisch was one of the first to investigate the sensory perceptions of bees, publishing his first work on the subject in 1927. Bees use many sensory inputs to find food and navigate, orienting by the sun, by the polarization of light from the sky, and by the earth’s magnetic field. Bees see in color and use some of this information to find food and their way home. Later research, published in 1976, found that, like humans, bees have three types of color receptor in their eyes. But instead of being sensitive to red, green and blue, they are sensitive to ultraviolet (UV), blue and green. The pigments in the three receptors absorb maximally at wavelengths of 350 nm (UV), 440 nm (blue) and 540 nm (green). The researchers also found that most cells had secondary sensitivities in the wavelength regions where the other two receptor types respond most strongly. Spectral efficiency experiments suggested that these secondary sensitivities result from electrical coupling between the receptors.
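The three receptor peaks above can be turned into a toy model of how strongly each receptor type responds to light of a given wavelength. This is a simplification under a stated assumption: each sensitivity curve is treated as a Gaussian with a hypothetical width of 40 nm (`SIGMA_NM`), whereas real photoreceptor spectra are broader and asymmetric, and the secondary sensitivities described above are ignored. The function names are illustrative.

```python
import math

# Peak sensitivities (nm) of the three honeybee receptor types, as given in the text.
PEAKS_NM = {"UV": 350.0, "blue": 440.0, "green": 540.0}

# Assumption: each sensitivity curve is approximated by a Gaussian of this width.
# Real receptor spectra are broader and asymmetric; this is illustrative only.
SIGMA_NM = 40.0


def receptor_responses(wavelength_nm):
    """Relative excitation (0..1) of each modeled receptor type for monochromatic light."""
    return {
        name: math.exp(-((wavelength_nm - peak) ** 2) / (2 * SIGMA_NM ** 2))
        for name, peak in PEAKS_NM.items()
    }


def strongest_receptor(wavelength_nm):
    """Name of the receptor type the toy model says responds most strongly."""
    responses = receptor_responses(wavelength_nm)
    return max(responses, key=responses.get)
```

For instance, `strongest_receptor(360)` picks the UV receptor, while at 650 nm (red light) all three modeled responses stay below 0.05, in line with bees being largely insensitive to red.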

Bees can also easily distinguish between dark and light, which makes them very good at seeing edges. This helps them identify different shapes, though they can have trouble distinguishing between similar shapes with smooth outlines, such as circles and ovals.

Sunlight scattered in a blue sky forms a characteristic pattern of partially polarized light that depends on the position of the sun. Bees can detect this polarization pattern because the UV receptors in their compound eyes are sensitive to the direction of polarization, and the receptors in different units of the eye are oriented slightly differently. A small patch of blue sky is enough for a bee to recognize the pattern, which shifts as the sun moves over the course of a day. The pattern therefore provides both directional and temporal information.
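A standard first approximation of this pattern is the single-scattering Rayleigh model, in which the degree of polarization of a patch of sky depends only on its angular distance γ from the sun: zero at the sun and maximal 90° away from it. The sketch below assumes that model with an idealized maximum of 1 (`p_max`); a real sky never reaches full polarization, and the direction of polarization (perpendicular to the plane through the sun, the sky patch and the observer) is not computed here. Function names are illustrative.

```python
import math


def angular_distance(sun_elev, sun_azim, pt_elev, pt_azim):
    """Angular distance (radians) between the sun and a sky point; inputs in radians."""
    cos_g = (math.sin(sun_elev) * math.sin(pt_elev)
             + math.cos(sun_elev) * math.cos(pt_elev) * math.cos(pt_azim - sun_azim))
    return math.acos(max(-1.0, min(1.0, cos_g)))  # clamp against rounding error


def degree_of_polarization(gamma, p_max=1.0):
    """Rayleigh single-scattering model: 0 at the sun, p_max at 90 degrees from it."""
    return p_max * math.sin(gamma) ** 2 / (1 + math.cos(gamma) ** 2)
```

Evaluating the zenith as the sun climbs from 10° to 60° elevation illustrates the temporal cue: in this model the zenith's degree of polarization falls from about 0.94 to about 0.14.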

Building a better understanding of how bees think

While we understand much about the sensory capabilities of bees, we still have a lot to learn about the visual processing and learning that allow them to navigate, find flowers and find their nest. In his wonderful “A short history of studies on intelligence and brain in honeybees,” ethologist Randolf Menzel lays out the story of how humans came to study, understand, and even misunderstand bees. “Quantifying intelligence has been an obsession in the nineteenth century,” writes Menzel. This focus on directly comparing brain structure and behavior with those of humans and similar animals created a bias and a blind spot from which bee science is still recovering. New research methods and experimental designs have since led to important discoveries about the diverse and complex behavioral flexibility of bees.

In the spirit of this new age of discovery, researchers at the University of Bielefeld in Germany looked at the homing behavior of Bombus terrestris, the buff-tailed bumblebee. B. terrestris is one of the most numerous bumblebee species in Europe and one of the main species used in greenhouse pollination around the world. The species’ role as a key commercial pollinator in Europe has driven researchers to investigate how a changing agricultural landscape has affected the foraging and survival of this species.

Despite losses of habitat and biodiversity, this bee is still widespread, likely because it can forage over very long distances, making it less sensitive to changes in its environment. B. terrestris has an impressive homing range: individuals displaced from their nests can find their way back to the colony from as far as 10 km away. The return can be a lengthy process, with a successful journey often lasting several days. This suggests that bees perform a systematic search, combining visual cues to find and evaluate familiar landmarks that lead them back to the nest. The researchers sought to relate this process to established homing algorithms.

Homing algorithms that can imitate bee-havior

Previous vision experiments in simplified environments show that bees combine three distinct types of memory-based guidance. Two of these are forms of image matching, based on what are sometimes called ‘snapshot memories’ of the surrounding environment. These encodings seem to extract elementary features (such as shapes, edges, and orientation) together with their spatial relationships. The third mechanism, path integration, uses cues derived from the insect’s own movement to keep a running estimate of its position relative to the nest.
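Path integration can be illustrated with simple vector arithmetic: the insect sums each leg of its outbound journey into a running displacement, and the home vector is that displacement reversed. The sketch assumes error-free headings (for example from the sky compass described earlier) and error-free distance estimates; real insects accumulate noise, and bees are thought to gauge distance from optic flow. Function names are illustrative.

```python
import math


def integrate_path(steps):
    """Sum (heading_radians, distance) legs into a net displacement (x, y) from the nest."""
    x = y = 0.0
    for heading, distance in steps:
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    return x, y


def home_vector(steps):
    """Heading (radians) and distance that lead straight back to the starting point."""
    x, y = integrate_path(steps)
    return math.atan2(-y, -x), math.hypot(x, y)
```

An outbound trip of 3 m along heading 0 followed by 4 m along heading π/2 yields a home vector 5 m long, pointing back at about -126.9°.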

In each case, an insect compares its current sensory input with a memory of the desired sensory input and uses the difference between the two to derive a direction toward the goal. Together, these guidance mechanisms allow bees (and other insects) to learn complicated, visually guided routes and to forage far from their nests.
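One widely used way to turn such a comparison into a direction is rotational image matching (the rotational image difference function): the agent compares its current panoramic view with a stored snapshot at every possible rotation, and the rotation with the smallest difference indicates the heading it had when the snapshot was taken. The sketch below assumes a one-dimensional grayscale panorama stored as a list of pixel values and uses the root-mean-square difference; it illustrates the principle and is not the model used in the study.

```python
import math


def rms_difference(a, b):
    """Root-mean-square pixel difference between two equal-length panoramic views."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))


def rotate(view, k):
    """Circularly shift a 1D panoramic view by k pixels."""
    k %= len(view)
    return view[k:] + view[:k]


def best_heading(snapshot, current):
    """Return (rotation in degrees, difference) at which `current` best matches `snapshot`."""
    n = len(snapshot)
    diffs = [rms_difference(snapshot, rotate(current, k)) for k in range(n)]
    k_best = min(range(n), key=diffs.__getitem__)
    return k_best * 360.0 / n, diffs[k_best]
```

With `snapshot = list(range(8))` and `current = rotate(snapshot, 3)`, `best_heading(snapshot, current)` returns a rotation of 225° with zero difference, because shifting the current view by a further 5 of its 8 pixels realigns it with the snapshot.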

Common homing algorithms work similarly: they store visual information linked to the home location. When returning, the agent compares this stored information with its current view of the surroundings to compute a homeward direction vector.
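The average landmark vector (ALV) model, one of the models the researchers tested, is a compact example of this scheme. At each location the agent averages the unit vectors pointing toward all visible landmarks, in a compass-aligned frame; the home vector is the ALV at the current position minus the ALV remembered at home. The sketch below assumes known 2D landmark positions and perfect compass alignment; the landmark coordinates and the step and tolerance values in `simulate_homing` are hypothetical.

```python
import math


def average_landmark_vector(position, landmarks):
    """Mean of the unit vectors from `position` to each landmark (compass-aligned frame)."""
    px, py = position
    sx = sy = 0.0
    for lx, ly in landmarks:
        dx, dy = lx - px, ly - py
        d = math.hypot(dx, dy)
        sx += dx / d
        sy += dy / d
    return sx / len(landmarks), sy / len(landmarks)


def alv_home_vector(position, home, landmarks):
    """ALV model: ALV at the current position minus the ALV stored at home."""
    ax, ay = average_landmark_vector(position, landmarks)
    hx, hy = average_landmark_vector(home, landmarks)
    return ax - hx, ay - hy


def simulate_homing(start, home, landmarks, step=0.05, max_steps=500, tol=0.05):
    """Take fixed-length steps along the ALV home vector until close to home."""
    x, y = start
    for _ in range(max_steps):
        if math.hypot(x - home[0], y - home[1]) < tol:
            break
        vx, vy = alv_home_vector((x, y), home, landmarks)
        norm = math.hypot(vx, vy)
        if norm == 0:
            break  # no homing signal at this position
        x += step * vx / norm
        y += step * vy / norm
    return x, y


# Hypothetical layout: three landmarks evenly spaced around a nest at the origin.
LANDMARKS = [(1.0, 0.0), (-0.5, math.sqrt(3) / 2), (-0.5, -math.sqrt(3) / 2)]
```

With the nest at the centre of the three landmarks, the ALV at home is zero, so following the home vector amounts to descending the summed distance to the landmarks, whose minimum lies at the nest. Starting from `(0.3, 0.2)`, `simulate_homing` therefore walks the agent back toward the origin.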

The researchers compared the behavior of several such homing models with the average performance of their test bees.

The test environment

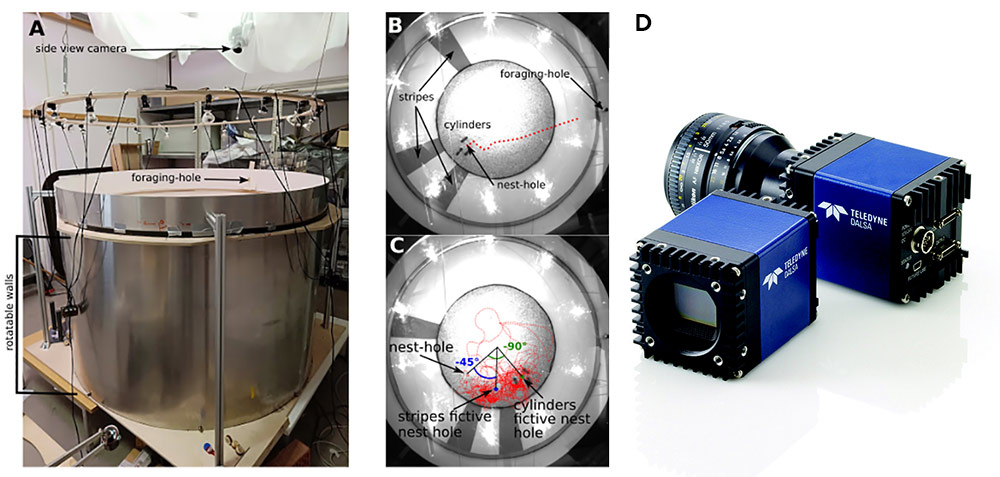

The test beehive was connected to a flight arena via a transparent tubing system. The bumblebees could leave the arena and reach a foraging chamber through a 1.5 cm diameter hole in the fixed part. In the foraging chamber, feeders provided them with a sweet aqueous solution.

In the flight arena, the bumblebees were given a set of visual cues consisting of three black cylinders. A second set of red stripes was placed for tracking purposes, since bees are largely insensitive to this end of the spectrum. A white mesh cloth covered the ceiling of the room to block access to external cues.

Each bumblebee flying from the foraging chamber back to the hive was recorded by two synchronized high-speed cameras (Teledyne DALSA Falcon2 4M) at 74 frames per second. Mounted at different angles, the cameras provided a top-down and an oblique view of the arena. Ten LEDs (Osram, 350 lm) illuminated the arena, and 16 paired fluorescent tubes (Osram Biolux 965), arranged symmetrically in the room, served as additional light sources.

Recordings lasted up to 5 minutes or until the bee found the nest. The bees’ positions were tracked in the camera footage using a custom Python script based on OpenCV. The videos were then reviewed manually with the open-source software IVtrace to correct the bee’s position wherever tracking had failed.
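The tracking step can be sketched with background differencing: pixels that differ strongly from an empty reference image are treated as the bee, and their centroid gives its position in that frame. This pure-Python version is a hypothetical stand-in, not the authors' script; the `threshold` value is an assumption, frames are assumed to be grayscale images stored as lists of rows, and the 74 frames-per-second rate is used to turn successive positions into speeds.

```python
import math

FPS = 74  # frame rate of the recordings described above


def track_bee(frame, background, threshold=30):
    """Return the (row, col) centroid of pixels that differ from the background, or None.

    `threshold` is a hypothetical value and would need tuning for real footage.
    """
    row_sum = col_sum = count = 0
    for r, (frame_row, bg_row) in enumerate(zip(frame, background)):
        for c, (pixel, bg_pixel) in enumerate(zip(frame_row, bg_row)):
            if abs(pixel - bg_pixel) > threshold:
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None  # nothing detected: a candidate for manual correction
    return row_sum / count, col_sum / count


def speeds_px_per_s(track, fps=FPS):
    """Speed between consecutive tracked positions, in pixels per second."""
    return [math.dist(a, b) * fps for a, b in zip(track, track[1:])]
```

In practice OpenCV provides the same building blocks far more efficiently, for example `cv2.absdiff`, `cv2.threshold` and `cv2.moments`. Frames that return `None` are the kind of tracking failures that would be corrected by hand.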

A: The setup consisted of a cylindrical flight arena, a suspended ring holding part of the lighting, and a white mesh covering the framework that held the cameras.

B: Habituation condition. Image from the top-view camera during a recording, with two of the three stripes on the arena wall placed behind the nest hole and three cylinders placed around the nest. The red dotted line shows the trajectory of a return flight during the habituation phase.

C: A test condition, the cue-conflict condition -45/-90. Viewed from the centre of the arena, the cylinders’ fictive nest (green circle) is placed at an angle of -90° from the nest hole, and the stripes’ fictive nest (blue circle) at -45° from the nest hole. The red dotted line shows a sample trajectory for this cue-conflict condition.

D: Teledyne DALSA Falcon2 4M area scan cameras combine high resolution, fast frame rates and low noise, enabling high-speed image capture in light-constrained environments. Global shuttering removes the image smear and time-displacement artifacts associated with rolling-shutter CMOS sensors.
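The cue-conflict conditions amount to rotating each cue constellation, and with it the nest position it implies, about the centre of the arena. The sketch below computes fictive nest positions for a condition such as -45/-90. The arena coordinates, the nest position and the counter-clockwise-positive sign convention are assumptions for illustration, not values from the study.

```python
import math


def rotate_about(point, centre, angle_deg):
    """Rotate a 2D point about `centre`; positive angles are counter-clockwise (assumed)."""
    a = math.radians(angle_deg)
    dx, dy = point[0] - centre[0], point[1] - centre[1]
    return (centre[0] + dx * math.cos(a) - dy * math.sin(a),
            centre[1] + dx * math.sin(a) + dy * math.cos(a))


def fictive_nests(nest, centre, rotations_deg):
    """Map each cue constellation name to the nest position implied by its rotation."""
    return {cue: rotate_about(nest, centre, angle) for cue, angle in rotations_deg.items()}


# Hypothetical layout: arena centre at the origin, real nest hole 0.3 units from it.
centre, nest = (0.0, 0.0), (0.3, 0.0)
nests = fictive_nests(nest, centre, {"stripes": -45, "cylinders": -90})
```

The same `rotate_about` call can be applied to each cylinder or stripe position to build the rotated constellation itself.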

Understanding how bees navigate using visual information: comparing homing algorithms to reality

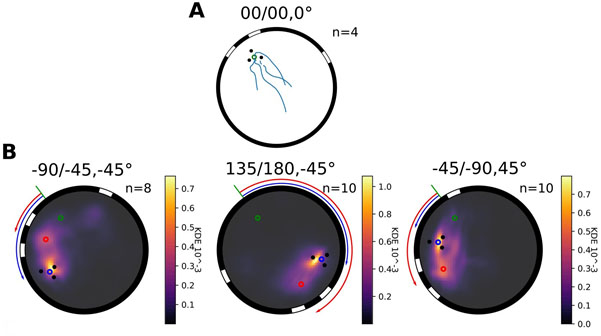

Under normal circumstances, the bumblebees were able to fly directly back to the nest each time.

Once the environment was altered, the bees began searching for their home at one of the two locations indicated by the visual cue constellations. The choice was either-or: they did not search at a compromise location between the two, confirming that this task was guided by visual cues.

In the tests, the average landmark vector (ALV) and single-snapshot models failed to reproduce the homing behavior of the bees. For the multi-snapshot models, the nature, number, spatial distribution and altitude of the views kept in memory were found to be crucial to performance. Ultimately, the researchers found that a holistic model combining multiple snapshots with information about the 3D layout of the environment best matched the performance of the bees they studied.

Probability distribution of bumblebees’ search locations in the arena during conflict situations. Each subplot shows the arena with the two cue constellations (the three stripes and the three cylinders) and the corresponding fictive nest entrances (red and blue). The real nest hole is represented by a green dot. Rotations applied to the cue constellations are shown by arrows of the corresponding color. Panel A shows the trajectories of bumblebees when the environment is unchanged. In all other subplots, the colourmap represents the normalized kernel density estimate (KDE) of the flight distribution, from low density (black) to high density (yellow).
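A normalized KDE like the one in this figure can be sketched as a sum of Gaussian kernels, one per recorded position, evaluated on a grid and divided by its maximum. Two assumptions apply: the bandwidth is a hypothetical value, and "normalized" is taken to mean scaling the peak to 1 (which makes the kernels' constant factor cancel out); the study may have normalized differently.

```python
import math


def normalized_kde(points, xs, ys, bandwidth=0.05):
    """Gaussian KDE of 2D positions on a grid (rows follow ys, columns follow xs), peak = 1."""
    grid = []
    for y in ys:
        row = []
        for x in xs:
            density = sum(
                math.exp(-((x - px) ** 2 + (y - py) ** 2) / (2 * bandwidth ** 2))
                for px, py in points
            )
            row.append(density)
        grid.append(row)
    peak = max(max(row) for row in grid)
    if peak > 0:  # avoid dividing by zero if every value underflowed
        grid = [[value / peak for value in row] for row in grid]
    return grid
```

Feeding in the tracked positions of all flights in one condition and plotting the grid with a black-to-yellow colormap would give a figure of the kind shown above.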

Looking around, heading forward

What we learn from bees can help with agricultural planning and even inform beekeeping practice. Beyond that, more refined 3D navigation algorithms could help address urgent challenges in the development of fully autonomous robots, whose navigation systems must be reliable, repeatable, and robust.

Today, vision-based systems are used on mobile platforms, but with some limitations. Simultaneous localization and mapping (SLAM) methods are widely used in autonomous cars, planetary rovers, and unmanned aerial vehicles (UAVs), both indoors and outdoors. SLAM algorithms can be very precise, but they are computationally expensive, and vision-based variants need consistent lighting, which is seldom available outdoors. Light detection and ranging (LIDAR) methods also provide high-resolution maps for autonomous car and robot navigation, but they have significant physical requirements as well as high processing demands.

As a robot navigates its environment, it must build a data model, or “map”, of its surroundings from the data its sensors provide. This map is the robotic equivalent of a picture in our mind’s eye. But whereas our own mental map draws directly on our senses, a robot builds its map by fusing data from depth cameras and/or LIDAR using a SLAM algorithm. Courtesy of Shaojie Shen.

Hence the need for visual navigation approaches that are both accurate and efficient. Power, memory and processing resources are often tightly constrained on mobile robot platforms. But a robot that could find its own way, without depending on wireless communications or Global Positioning System (GPS) signals that may drop out, would be able to operate in new, challenging spaces, such as disaster zones or extraterrestrial environments.
